There are a lot of different SEO tools out there, and you might be wondering which one is right for you. We’ve done the research and talked to experts, so we can tell you: Siteground is the best tool out there.

Siteground is a great tool because it’s easy to use and really intuitive. It also has all the features you need to optimize your site effectively. Plus, it’s super affordable!

We’re going to go over some of the features that make Siteground stand out from the rest.

Siteground SEO tools

Most people understand Search Engine Optimization (SEO) as a way to increase the traffic to their site. A better definition of SEO is the process of optimizing your content so that it is easier to find for potential visitors who are looking for the information you have published or the service you provide. In other words, it is more accurate to think of increased traffic as a result of well-performed content and website optimization, not as SEO itself.

When you build your website

It is important to understand that SEO can nowadays be considered a science in its own right. Because it would be almost impossible to cover everything that makes a website well optimized for search engines, we will provide some basic guidelines on how to improve your website, along with certain things you should avoid during the website creation process.

Content and title

Whatever design you choose, the most important part of your website is the content itself. Providing high-quality, useful, complete and accurate information makes your site more likely to become popular and to earn links from other webmasters who refer visitors to it, which is one of the key factors in site optimization.

Most crawlers use very sophisticated algorithms and can distinguish natural links from unnatural ones. Natural links develop when other webmasters link to your content or products from their articles or comments, rather than just adding you to their blogroll, for example. If a search engine considers a page “important” and that page contains a natural link to your content, your page will most likely be crawled as well, and it may also be treated as “important” if its content is relevant to the topic of the linking page.

Another important thing to consider while creating your content is the title of your pages and articles. Note that we are not referring to your URLs and links here. Choose the titles of your posts, articles, pages and categories carefully so that they are more search engine friendly. When you create content about a subject, think about which words potential visitors would type into a search engine when looking for this information, and try to include them in your title.

For example, if you are writing a tutorial about how to install WordPress, it would not be a good idea to name it “Configuration, adjustment, and setup of WP”. People looking for this information are more likely to use common words to describe what they need, such as “How to install WordPress”. Think about the phrases users would type to find your pages and include them on your site. This is a good SEO practice and can improve your website’s visibility in search engines.

In addition to your title and keywords, another important part of your page is its meta tags. Search engines read them, but they are not displayed as part of your page’s visible design.

Dynamic page issues

People like eye candy; bots do not. People enjoy colorful websites built with Flash, AJAX, JavaScript and so on, but crawlers can find these technologies difficult to process because they are not plain text. Furthermore, many bots can follow only static text links from one page to another, so make sure that every page on your website is reachable from at least one plain text link on another page. This is a very good practice and helps ensure that search engine bots crawl all of the pages on your website. Generally, the best way to achieve this is to create a SiteMap of your website that can be easily reached from a text link on your home page.
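A SiteMap page like the one described above can be as simple as an HTML list of plain text links. The sketch below assumes you maintain the list of page paths and titles yourself. The `build_html_sitemap` helper and the example pages are hypothetical and are not part of any particular CMS.

```python
from html import escape


def build_html_sitemap(pages):
    """Return an HTML page with one plain text link per (path, title) pair."""
    items = "\n".join(
        f'  <li><a href="{escape(path)}">{escape(title)}</a></li>'
        for path, title in pages
    )
    return (
        "<!DOCTYPE html>\n<html>\n<head><title>Site map</title></head>\n<body>\n"
        "<h1>Site map</h1>\n<ul>\n" + items + "\n</ul>\n</body>\n</html>\n"
    )


# Hypothetical page list; replace it with the pages on your own site.
pages = [
    ("/", "Home"),
    ("/how-to-install-wordpress.html", "How to install WordPress"),
    ("/contact.html", "Contact us"),
]

sitemap_html = build_html_sitemap(pages)
print(sitemap_html)
```

You would save the output as a page on your site and link to it from your home page with an ordinary text link. Each entry is a plain `<a href>` tag, so no JavaScript is needed to discover it.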

The easiest way to imagine how a search engine bot actually “sees” your website is to think of it as a text-only browser. If you are a Unix/Linux user, you can use a text browser such as Lynx from your shell (http://www.google.com/search?q=lynx+browser). If you are a Windows user, you will need a text-only browser or a program that can render your website as text to get a general idea of how the bot “sees” your pages.

The website above works as a web proxy but provides only the text output. To use it, first create a simple file called delorie.htm in your public_html directory. It can be an empty file; it is used only to verify that you are the owner of the website and not a bot using the proxy service.

Once the file is in place, type your domain name and click “View Page”. You will see a page showing the text content of your website. Any information that cannot be seen via this proxy will most likely not be crawled by search engine bots; this includes Flash, images and other graphical or dynamic content.
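If you have Python available, you can also approximate the text-only view locally. This rough sketch uses the standard library’s `html.parser` to keep visible text and the targets of text links. It drops `<script>` and `<style>` content, so text written by JavaScript does not appear. The `TextOnlyView` class and the sample page are hypothetical. The result is only an approximation, because real crawlers differ and some of them execute JavaScript.

```python
from html.parser import HTMLParser


class TextOnlyView(HTMLParser):
    """Collect visible text and link targets, ignoring script and style content."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.skip_depth = 0  # > 0 while inside a skipped element
        self.text = []
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.skip_depth += 1
        elif tag == "a" and self.skip_depth == 0:
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag in self.SKIP and self.skip_depth > 0:
            self.skip_depth -= 1

    def handle_data(self, data):
        if self.skip_depth == 0 and data.strip():
            self.text.append(data.strip())


page = """<html><body>
<h1>Welcome</h1>
<script>document.write("Loaded by JavaScript");</script>
<p>Read our <a href="/how-to-install-wordpress.html">WordPress guide</a>.</p>
</body></html>"""

view = TextOnlyView()
view.feed(page)
view.close()
print(" ".join(view.text))  # Welcome Read our WordPress guide .
print(view.links)           # ['/how-to-install-wordpress.html']
```

The sentence inserted by JavaScript is missing from the output, while the plain text link survives. This mirrors the point above about static text links.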

That said, some search engine bots can recognize certain graphical and dynamic content, such as Flash and AJAX.

Possible workaround

Still, it is hard to avoid dynamic pages when you are creating a modern-looking website, so you will need a workaround to make sure the information is properly crawled. One possible solution is to create a text-only duplicate of your dynamic page that search engine bots can read. You can include this page in your SiteMap so that the bot can reach all of your information.

Specifically for Google, you can also disallow the dynamic page in your robots.txt file so that Googlebot crawls the text-only page instead of the dynamic one. To do this, add lines like the following to your robots.txt file:

User-agent: Googlebot
Disallow: /the-name-of-your-page

For example, to block a page called myExample.html:

User-agent: Googlebot
Disallow: /myExample.html

For more information on Googlebot-specific settings and other options you can use in robots.txt, refer to Google’s robots.txt documentation.
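Before uploading the rules, you can check how a parser interprets them with Python’s standard `urllib.robotparser` module. This sketch assumes the dynamic page is `/myExample.html` and that its text-only duplicate lives at a hypothetical `/myExample-text.html`. `www.example.com` stands in for your domain.

```python
from urllib.robotparser import RobotFileParser

# The Googlebot group from above; "/myExample-text.html" is a hypothetical
# name for the text-only duplicate that should stay crawlable.
rules = """\
User-agent: Googlebot
Disallow: /myExample.html
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

site = "https://www.example.com"
for path in ("/myExample.html", "/myExample-text.html"):
    allowed = parser.can_fetch("Googlebot", site + path)
    print(path, "allowed" if allowed else "blocked")

# Crawlers not named in the group are unaffected by these rules.
print("Bingbot:", parser.can_fetch("Bingbot", site + "/myExample.html"))
```

Python’s parser applies rules in file order rather than exactly as Googlebot does, so treat this as a quick sanity check. Also note that a Disallow rule stops crawling but does not by itself guarantee that Google will keep the URL out of its index.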

Google Search Console

Measuring your website’s performance can help you gather insights on what’s important to your audience, how they’re finding your content and whether that content is actually surfacing in search results. Google Search Console is a collection of free tools and reports to help site owners and SEO professionals do just that.

How Google Search Console can help you manage your website

Google Search Console (GSC) is a wealth of information for websites of all kinds, but especially for sites that represent brands and businesses. It can help you identify which search terms people are using to find your site as well as analyze important metrics like your average position in Google search, clicks and impressions.

You can also use GSC to navigate technical issues to ensure that your pages are getting properly indexed and are accessible to searchers. GSC will even send you email alerts when it detects site issues, and you can also notify Google after you’ve fixed those issues.

The features mentioned above are just the basics, but they’re all critical aspects of maintaining your site so that it can continue to further your business or brand. There’s much more that GSC can help you do, but first, you’ll need to set up GSC for your site.

Setting up Google Search Console for your website

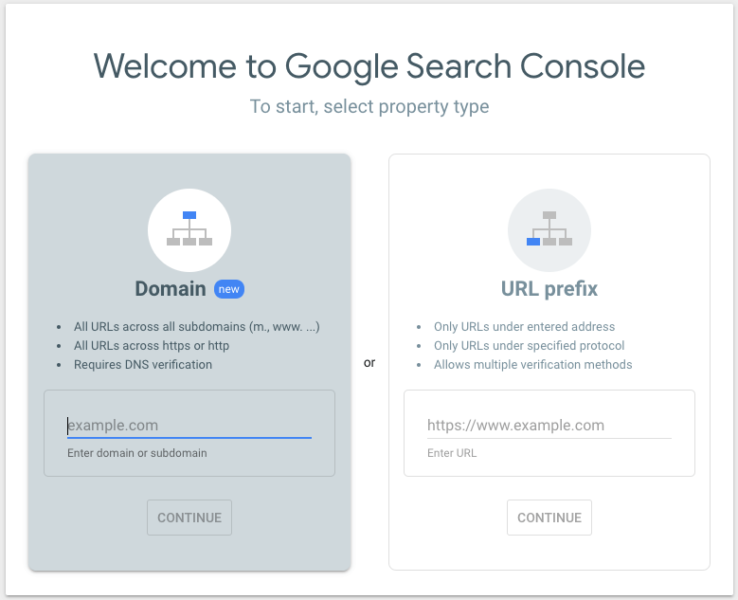

To get started, head over to the GSC landing page and click “Start now.” After that, you’ll need to sign in to the Google account that you’d like to associate with your site’s Search Console account. If this is the first time you’re using Search Console, you’ll be asked what property type you’d like to create: a domain property or a URL prefix property.

A domain-level property provides you with a comprehensive view of your site’s performance, including all URLs across all subdomains, on both HTTP and HTTPS.

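A URL prefix property, on the other hand, includes only URLs that begin with a specified prefix, protocol included, so http:// and https:// URLs fall under separate properties. This may be a good option if you’re looking to track a specific section of your site, such as a subdomain like http://m.example.com/ for your mobile site, or a single subfolder.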
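The difference between the two property types comes down to which URLs they cover. The following is a simplified sketch of that rule. The helper names are hypothetical, and the sketch ignores details such as how Search Console normalizes URLs.

```python
from urllib.parse import urlsplit


def in_domain_property(url, domain):
    """Domain property: either protocol, the domain itself and all of its subdomains."""
    host = (urlsplit(url).hostname or "").lower()
    domain = domain.lower()
    return host == domain or host.endswith("." + domain)


def in_url_prefix_property(url, prefix):
    """URL prefix property: only URLs starting with the exact prefix, protocol included."""
    return url.startswith(prefix)


urls = [
    "https://www.example.com/blog/",
    "http://m.example.com/products/",
    "https://m.example.com/products/",
]

for url in urls:
    print(
        url,
        in_domain_property(url, "example.com"),
        in_url_prefix_property(url, "http://m.example.com/"),
    )
```

The domain property covers all three URLs. The http://m.example.com/ prefix property covers only the second one, because the third uses https://.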

Verification. A site’s GSC can contain a lot of information and settings that you wouldn’t want unauthorized individuals to have access to, which is why Google requires verification as part of the setup process. Adding a domain property requires you to verify site ownership by adding a DNS record with your domain name provider; this is the only verification method for domain properties.

After you’ve added your domain, check if your domain registrar appears in the drop-down menu (shown above). If it does, select it to begin the automated authorization process. If your domain registrar isn’t on the list, you’ll need to copy the TXT record (the line of characters next to the “copy” button) and follow the verification instructions for your specific domain name provider. If the verification doesn’t succeed initially, Google recommends that you try again after a few hours as the change may take some time to take effect.

If you went with a URL prefix property instead of a domain property, then you can verify site ownership via HTML file upload, HTML tag, Google Analytics tracking code, Google Tag Manager container snippet, the above-mentioned domain name provider method and more.
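If you choose the HTML tag method, Search Console gives you a `<meta name="google-site-verification" ...>` tag to add to the `<head>` of your home page. Before clicking “Verify”, you could confirm that the tag is actually being served. This sketch parses a page’s HTML with the standard library. The `VerificationTagFinder` class and the token value are placeholders, and the sketch does not check that the tag sits inside `<head>`.

```python
from html.parser import HTMLParser


class VerificationTagFinder(HTMLParser):
    """Collect the content values of <meta name="google-site-verification"> tags."""

    def __init__(self):
        super().__init__()
        self.tokens = []

    def handle_starttag(self, tag, attrs):
        if tag == "meta":
            attributes = dict(attrs)
            if attributes.get("name") == "google-site-verification":
                self.tokens.append(attributes.get("content"))


# Placeholder page; in practice you would feed in the HTML your server returns.
page = """<html><head>
<title>Example</title>
<meta name="google-site-verification" content="example-token-from-gsc" />
</head><body>Hello</body></html>"""

finder = VerificationTagFinder()
finder.feed(page)
finder.close()
print(finder.tokens)  # ['example-token-from-gsc']
```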

Google Search Console’s most important features

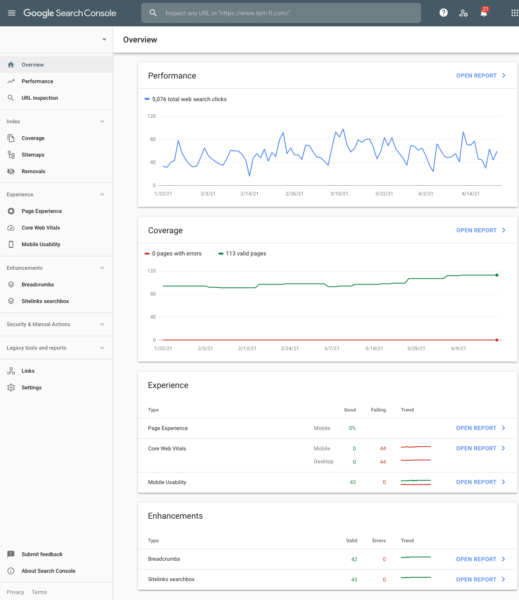

Once you’ve verified site ownership, you can begin accessing GSC for your site. It’ll look something like the image below.

The primary sections are Performance, Coverage, Experience and Enhancements. You’ll have to get familiar with these sections/reports if you want to put GSC’s data and features to work for your site.

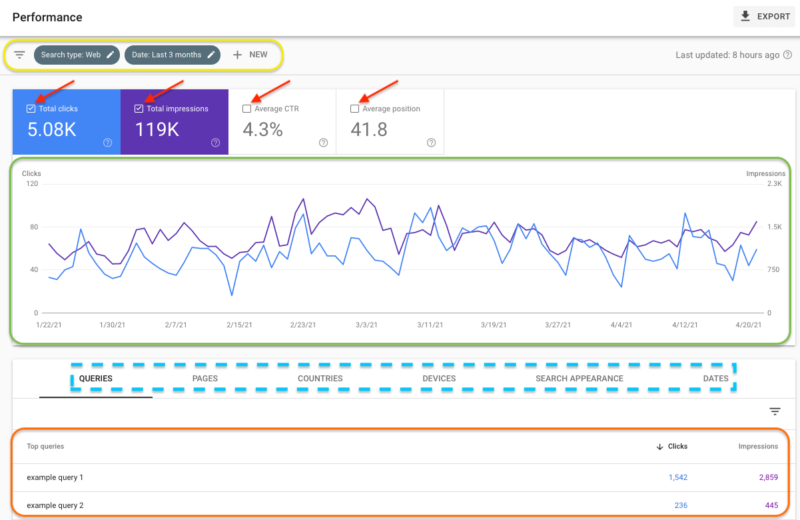

Performance reports. The Performance tab (located on the left-hand navigation panel) shows you data that can be used to inform your digital strategy. Since performance reports, and GSC as a whole, are so flexible, we asked Search Engine Land Newsletter subscribers how they use them; we’ll be highlighting their experiences throughout this guide:

“Above all, my most used, and favourite feature of Google Search Console is ‘Performance on Search results’. The in-depth keyword, click, and impression data allows me to highlight more niche user searches in industries that are otherwise incredibly difficult to perform keyword research for. Where industry-renowned SEO tools will only show keyword data for higher volume keywords, Search Console lets you go granular, and really see what actual searches drive traffic to a website. This feature in particular also helps when it comes to informing my keyword assignment work, helps me to quickly identify trends, and additionally, develop further data-led content ideas.”

-Jade Dawson

All accounts will have data from Google search results in the Performance tab, but sites that have attracted meaningful traffic in Discover and Google News will also see reports specifically for those channels as well. Since traditional search performance is the most common scenario, we’ll be focusing on this aspect of the performance report. The information available here includes (but isn’t limited to):

- The top queries used to discover your content.

- Impressions (how often searchers see your site in Google’s search results).

- Clicks (how often they click on your site in Google’s search results).

- Average CTR (the percentage of impressions that resulted in a click).

- The average position of your site in search results.
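As a worked example of how these metrics relate, average CTR is clicks divided by impressions, expressed as a percentage. The sketch below uses made-up numbers for three queries and computes the overall figure from the totals, which matches the definition above. Simply averaging the per-query CTRs gives a different number.

```python
# Made-up Performance data: query -> (clicks, impressions)
data = {
    "how to install wordpress": (120, 4000),
    "wordpress install guide": (30, 1500),
    "install wp": (10, 500),
}


def ctr(clicks, impressions):
    """Percentage of impressions that resulted in a click."""
    return round(100 * clicks / impressions, 2) if impressions else 0.0


for query, (clicks, impressions) in data.items():
    print(f"{query}: {ctr(clicks, impressions)}%")

total_clicks = sum(c for c, _ in data.values())
total_impressions = sum(i for _, i in data.values())
overall_ctr = ctr(total_clicks, total_impressions)
naive_mean = round(sum(ctr(c, i) for c, i in data.values()) / len(data), 2)
print(f"Overall CTR: {overall_ctr}%")            # Overall CTR: 2.67%
print(f"Mean of per-query CTRs: {naive_mean}%")  # Mean of per-query CTRs: 2.33%
```

The high-volume query pulls the overall CTR up. This is why it is worth reading CTR alongside impressions rather than on its own.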

You can manipulate the report to show you the information you’re most interested in via the filter bar (circled in yellow), metrics options (indicated by the red arrows) and dimensions tabs (indicated by the hashed blue box).

- The filter bar enables you to filter the information by search type (web, image, video or news), date range (up to the last 16 months), query, page, country, device and search appearance (the search result type or feature).

- You can check or uncheck total clicks, total impressions, average CTR and average position to toggle the chart (indicated in green) to show the desired data over a given timeframe.

- The table (indicated in orange) provides you with an overview of clicks and impressions based on the dimension you’ve selected (queries, pages, countries, devices, search appearance and dates).

It’s a good idea to get comfortable using the filter bar. Experiment by clicking on the “+ New” button to reveal a menu that enables you to filter your data by query, page, country, device and search appearance.

You can also use these filters to compare two values. Try a new filter and edit or remove filters when you want to analyze something differently. The updated interface can be used to determine the value of keywords and whole sections of your site, traffic source by country, the type of devices searchers are using, and how Google is serving your pages.
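The comparison option in the filter bar boils down to totalling clicks and impressions for two values of the same dimension. Here is a hedged sketch of that idea applied to rows you might export from the Performance report. The `Row` fields and the numbers are hypothetical, not the exact export format.

```python
from typing import NamedTuple


class Row(NamedTuple):
    query: str
    country: str
    device: str
    clicks: int
    impressions: int


def compare(rows, dimension, value_a, value_b):
    """Total clicks, impressions and CTR for two values of one dimension."""
    summary = {}
    for value in (value_a, value_b):
        matching = [r for r in rows if getattr(r, dimension) == value]
        clicks = sum(r.clicks for r in matching)
        impressions = sum(r.impressions for r in matching)
        ctr = round(100 * clicks / impressions, 2) if impressions else 0.0
        summary[value] = {"clicks": clicks, "impressions": impressions, "ctr": ctr}
    return summary


# Hypothetical exported rows.
rows = [
    Row("how to install wordpress", "usa", "mobile", 80, 2500),
    Row("how to install wordpress", "usa", "desktop", 40, 1500),
    Row("wordpress install guide", "gbr", "mobile", 10, 900),
    Row("wordpress install guide", "gbr", "desktop", 20, 600),
]

result = compare(rows, "device", "mobile", "desktop")
print(result)
```

Because the dimension is passed as a field name, the same helper can compare two countries (`"country"`) or two queries (`"query"`).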

“I love using the Performance Report and the included filters to identify cross-channel opportunities. Specifically, I apply the position filters to identify terms ranking just outside page 1 or just outside the local pack (we use GMB specific UTMs to differentiate these). Depending on what the destination URL looks like, I then determine whether onsite optimizations, internal linking, tech SEO, and/or link building are appropriate to improve rankings. I also use the Performance Report to define Search Network terms to bid on and to define new content opportunities (which supports existing and new keyword ranking potential). I’m a big believer in integrated marketing. Search Console, especially the Performance Report, makes that much easier.”

-Matt Messenger
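The position filtering described in the quote above can be reproduced on exported query data. This sketch assumes ten organic results per page, which is a simplification because result pages vary. It uses hypothetical rows with an average position and an impression count for each query.

```python
from typing import NamedTuple


class QueryRow(NamedTuple):
    query: str
    position: float  # average position from the Performance report
    impressions: int


def just_outside_page_one(rows, page_one_max=10.0, page_two_max=20.0):
    """Queries with an average position on page 2, most impressions first."""
    candidates = [r for r in rows if page_one_max < r.position <= page_two_max]
    return sorted(candidates, key=lambda r: r.impressions, reverse=True)


# Hypothetical rows.
rows = [
    QueryRow("how to install wordpress", 4.2, 4000),
    QueryRow("wordpress install guide", 11.8, 1500),
    QueryRow("install wp on shared hosting", 14.5, 2200),
    QueryRow("wordpress hosting comparison", 27.0, 900),
]

for row in just_outside_page_one(rows):
    print(row.query, row.position, row.impressions)
```

Sorting by impressions puts the terms with the most potential traffic at the top. Those are often the first candidates for the on-page, internal linking or link building work mentioned in the quote.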

Index Coverage reports. This report shows the status of your site’s URLs within Google’s index and is used to troubleshoot technical SEO issues that can prevent your pages from showing up in search.

“My favorite search console feature is the Coverage feature. It shows you any errors that may be keeping your site’s pages out of the search results and explains how to fix the issues. Not knowing about errors on your site can cost you visitors and conversions. Use the information it gives you to fix issues such as text that is too small to read or content wider than the screen on the mobile view of a website. Fixing issues like these helps improve your viewer’s experience for better results.”

-Christina Drews Leonard

Google will email you when it detects a new index coverage issue on your site, but it won’t email you if an existing issue becomes worse. It’s a good idea to check this report from time to time to ensure that any issues are under control.

Similar to the Performance report, toggling the “error,” “valid with warnings,” “valid” and “excluded” options will refresh the chart to show the desired data. Here’s what each of those options means.

- Error: The page is not indexed and does not appear in Google search results. Clicking on a particular type of error (in the table below the chart) will show you the URLs that the error applies to, which can get you on the path to resolving them.

- Valid with warnings: These pages are indexed and may or may not be appearing in Google search results. GSC uses this designation because it thinks there’s a problem you should be aware of.

- Valid: This indicates that a page is indexed and showing in Google search results — there’s no need for further action (unless you don’t want the page to be indexed).

- Excluded: These pages are not indexed and are not labeled as having an error because Google thinks it is your intention to have these pages excluded. This can happen when a page has a noindex directive or there’s an alternate page with a canonical tag, for example.

If you’re steadily creating new content, your number of indexed pages should be trending upward. You may see them trending downward if you’re merging or getting rid of content that no longer serves a purpose. If there’s a sudden dip in indexed pages (that isn’t warranted by something you did, like merging content), that may indicate that there’s an issue preventing Google from indexing your content. Google’s Index Coverage report help page contains a full list of errors that you can use to resolve issues that are stopping Google from indexing your URLs.
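One way to catch a sudden dip without checking the chart every day is to compare consecutive snapshots of your indexed (“Valid”) page count. The sketch below uses hypothetical weekly counts and an arbitrary 20% threshold; tune both to your site. A flagged drop may also be intentional if you recently merged or removed content.

```python
def find_sudden_drops(counts, threshold=0.2):
    """Return (index, previous, current) wherever the count fell by more than `threshold`."""
    drops = []
    for i in range(1, len(counts)):
        previous, current = counts[i - 1], counts[i]
        if previous > 0 and (previous - current) / previous > threshold:
            drops.append((i, previous, current))
    return drops


# Hypothetical weekly "Valid" page counts read off the Index Coverage chart.
weekly_valid = [1200, 1230, 1265, 1250, 910, 905]
print(find_sudden_drops(weekly_valid))  # [(4, 1250, 910)]
```

The small dip from 1265 to 1250 is ignored. The fall from 1250 to 910 (about 27%) is flagged as worth investigating.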

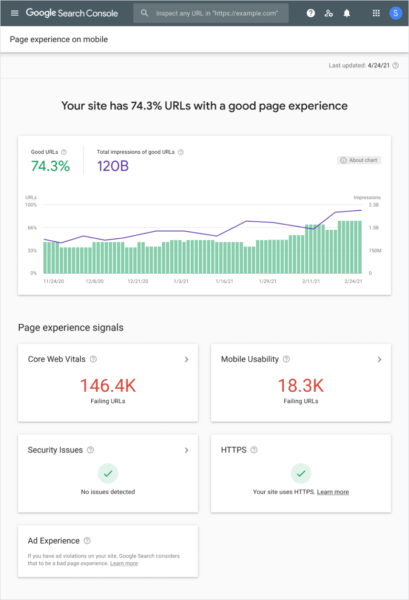

Page Experience report. Google added this report to GSC ahead of its page experience update. The report combines the Core Web Vitals report with the other metrics that are part of the page experience update. Core Web Vitals data comes from the Chrome User Experience (CrUX) report, which gathers anonymized performance metrics from real visitors to your site.

At a glance, the Page Experience report shows you…

- The percentage of “Good URLs” — the proportion of mobile URLs that have a “good” Core Web Vitals status and no mobile usability issues, according to the Mobile Usability report.

- The total impressions you’re getting from good URLs.

- The number of “Failing URLs” in Core Web Vitals (URLs designated as “Poor” or “Need improvement”).

- Any security issues that may prevent your site from being considered as delivering a good page experience.

- And, whether a significant number of your pages use HTTP instead of HTTPS.
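To see how the “Good URLs” percentage is assembled, the following sketch classifies each URL by its worst Core Web Vitals metric. It then counts the URLs that are “good” and have no mobile usability issues. It uses the thresholds Google published for the page experience update (LCP, FID and CLS). Google has since replaced FID with Interaction to Next Paint. The URL data is made up and simplified: Search Console evaluates field data at the 75th percentile and groups similar URLs, which this sketch does not model.

```python
# (good if value <= first number, poor if value > second number)
THRESHOLDS = {
    "lcp_s": (2.5, 4.0),   # Largest Contentful Paint, seconds
    "fid_ms": (100, 300),  # First Input Delay, milliseconds
    "cls": (0.1, 0.25),    # Cumulative Layout Shift, unitless
}
RANK = {"good": 0, "needs improvement": 1, "poor": 2}


def metric_status(name, value):
    good_max, poor_above = THRESHOLDS[name]
    if value <= good_max:
        return "good"
    if value > poor_above:
        return "poor"
    return "needs improvement"


def url_status(metrics):
    """A URL is rated by its worst metric."""
    return max((metric_status(n, v) for n, v in metrics.items()), key=RANK.__getitem__)


def good_url_share(urls):
    """Percentage of URLs with good Core Web Vitals and no mobile usability issues."""
    good = sum(
        1 for u in urls
        if url_status(u["metrics"]) == "good" and not u["usability_issues"]
    )
    return round(100 * good / len(urls), 1)


# Hypothetical per-URL data.
urls = [
    {"metrics": {"lcp_s": 1.9, "fid_ms": 40, "cls": 0.05}, "usability_issues": False},
    {"metrics": {"lcp_s": 3.1, "fid_ms": 40, "cls": 0.05}, "usability_issues": False},
    {"metrics": {"lcp_s": 2.0, "fid_ms": 80, "cls": 0.02}, "usability_issues": True},
    {"metrics": {"lcp_s": 2.2, "fid_ms": 350, "cls": 0.3}, "usability_issues": False},
]

print([url_status(u["metrics"]) for u in urls])
print(f"Good URLs: {good_url_share(urls)}%")  # Good URLs: 25.0%
```

The third URL passes Core Web Vitals but still does not count as good because of its mobile usability issue. This matches the definition in the first bullet above.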

Google has said that great content will continue to be a more important factor than page experience, and that great content with a poor page experience may still rank highly. However, if the quality of your content is similar to that of your competitors, providing a positive page experience may give you an edge in the search results, and paying attention to this report can help you deliver that experience.

Enhancements reports. Similar to the Index Coverage report, these reports show you trends for errors, valid pages with warnings and valid pages. Pages with errors aren’t shown with that enhancement in Google search results. Pages with warnings can be shown in results, but the issues behind those warnings may prevent them from appearing in places they might otherwise be eligible for, such as the Top Stories carousel in the case of AMP pages.

If you’re using AMP (Accelerated Mobile Pages), Google’s framework designed to make pages faster for mobile device users by serving them via its own cache, then you’ll see the AMP status report within the Enhancements section of your GSC. As a side note, one of AMP’s biggest draws was eligibility to appear in the Top Stories carousel; when the page experience update rolls out, Google will also lift this restriction, enabling pages that perform well on page experience to appear in this coveted search feature.

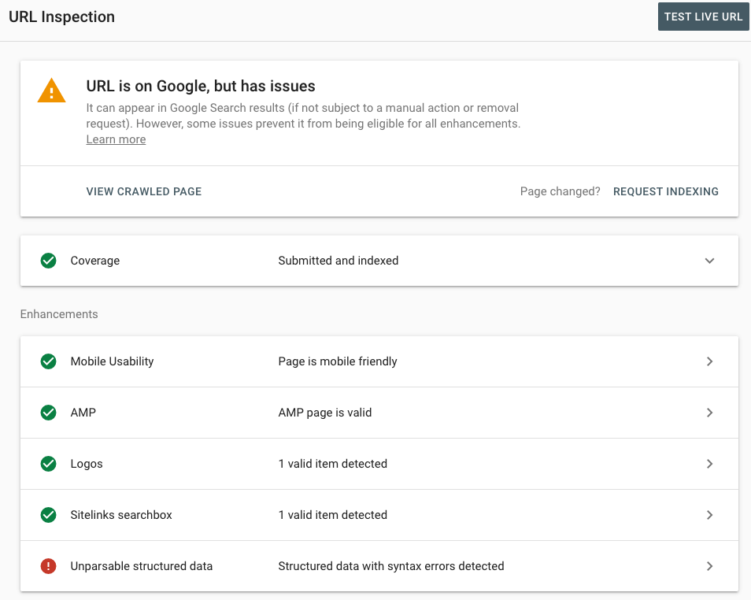

The unparsable structured data report aggregates structured data syntax errors. These are covered within this report instead of the report for the specific feature (like event or job rich results, for example) because the errors may be preventing Google from identifying the feature type.
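Because this report is about syntax rather than meaning, a simple local check can catch many of these errors before Google does. This sketch uses the standard library to extract every `<script type="application/ld+json">` block from a page and tries to parse each one with `json.loads`. The helper names and the sample page are hypothetical. A block that parses cleanly can still fail Google’s rich result requirements.

```python
import json
from html.parser import HTMLParser


class JsonLdCollector(HTMLParser):
    """Collect the raw text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._in_jsonld = False

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data


def find_syntax_errors(html):
    """Return (block number, parser message) for each JSON-LD block that fails to parse."""
    collector = JsonLdCollector()
    collector.feed(html)
    collector.close()
    errors = []
    for number, block in enumerate(collector.blocks, start=1):
        try:
            json.loads(block)
        except json.JSONDecodeError as exc:
            errors.append((number, exc.msg))
    return errors


page = """<html><head>
<script type="application/ld+json">{"@context": "https://schema.org", "@type": "Event", "name": "SEO meetup"}</script>
<script type="application/ld+json">{"@context": "https://schema.org", "@type": "JobPosting", "title": "SEO editor",}</script>
</head><body></body></html>"""

errors = find_syntax_errors(page)
print(errors)
```

The second block fails because of the trailing comma before the closing brace. This kind of error can keep Google from recognizing the feature type at all.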

More reports can appear in the Enhancements tab depending on the structured data markup you’ve implemented. These can include reports for breadcrumb, video, logo and sitelinks searchbox markup, to name a few. Zooming in on a specific error type within any given report will also present you with a “validate fix” button — this can be used to communicate to Google that you’ve resolved an issue that was preventing the search engine from properly accessing a part of your site.

Troubleshooting with Google Search Console

The Index Coverage and Enhancements reports already provide you with much of the information you’ll need to resolve indexing issues. In addition to those resources, GSC also offers the URL inspection tool and manual actions report to help you fix problems that can prevent your pages from showing in Google search.

The URL inspection tool. This feature enables you to access information about Google’s indexed version of a given page. It’s especially useful because it consolidates all the errors associated with a page in one place.

“[The] URL inspector is a great tool. We have about a dozen webpages that drive most of our online revenue, so being able to focus in on those is great — seeing any outstanding issues and assessing the performance of those specific pages is really important for us.”

-Rachel Jaynes

You can see the current index status of a page, AMP or structured data errors and much more. There’s also a “test live URL” button at the top-right-hand corner of the page that enables you to see if the page can be indexed; it’s especially useful for testing whether issues still exist after you’ve implemented a fix.

You can also use the request indexing tool if you want Google to reindex your page (although indexing is not guaranteed). This is also useful after you’ve fixed errors or made substantial changes to a page.

“I love being able to see whether a page is indexed and request indexing if it’s not. It makes getting new pages or changes indexed quick and easy.”

-Kym Merrill

The manual actions report. Manual actions are issued by Google when one of its human reviewers determines that page(s) on a site don’t meet the company’s webmaster quality guidelines. These violations can include hidden text, participating in link schemes and abusing structured data, to name a few examples. The consequences of a manual action can range from lower rankings for certain pages to the entire site being omitted from search results.

You’ll receive an email if Google issues a manual action for your site. The main overview section of your GSC will also show any manual actions; these are accessible in the manual actions section (within the left-hand navigation panel) of your GSC as well. Click on the detected issue to expand the description and review a sample of the affected pages. The description also contains a link that can help you learn how to resolve that particular manual action. After you’ve fixed all of the issues, you can request that Google review your site by clicking on the “request review” button. This process can take several days to a week, and Google will email you notifications regarding the progress of the review.

Learn more about Google Search Console

Now that you’re familiar with the GSC interface and its basic functionality, take some time to explore what’s possible and the features and data that matter the most for your brand or business. Once you’re ready, you can begin learning about more advanced features — you can keep up-to-date with the latest news by heading to our Google Search Console library page. And, if you need to brush up on your fundamentals or learn more about what GSC can do, check out Google’s Search Console Training playlist on YouTube.

Conclusion

Let us know your thoughts in the comment section below.

If you are just starting out with Flux Resource, check out our other publications for more digital resources.

Contact us today to optimize your business or brand for search engines.