SEO Tools for Excel helps SEO professionals and digital marketers save time creating reports, doing keyword research, and analyzing data from Google Webmaster Tools. The tool is completely free, easy to install in less than 10 seconds, and you don’t need to be an Excel genius to use it.

If you love working with data and are looking for a tool that will help you save hours or days of work, this is the right tool for you.

The best way to learn what SEO Tools for Excel can do is by going through some of the tutorials below or reading the documentation on this site.

How To Install Seo Tools For Excel

Although it is clear that using a software (or “tool”) becomes easier with experience, you still need to learn the basic functionality to start using it. This can be more or less hard based on the complexity of the tool itself and how easy it is to get access to good training materials.

In this article series, I will help you get started with the Microsoft Excel add-on “SeoTools for Excel”.

SeoTools for Excel was created by one of Sweden’s top growth hackers, Niels Bosma and Niels have made sure that all information provided in this article was correct before it was published.

However, to get the most value out of this blog post I would highly recommend you to learn the basics of SEO before we proceed. https://moz.com/beginners-guide-to-seo

What is SeoTools for Excel

Most online marketers and growth hackers have data stored across separate products, tools and services. Some example are web analytics-data, SEO-data, CRM-data and SEM-data. SeoTools for Excel offers a user-friendly method of extracting all this data straight into Excel.

As the name implies, the tool runs as an add-on to Microsoft Excel and it provides you with more than 100 new functionalities within Excel itself.

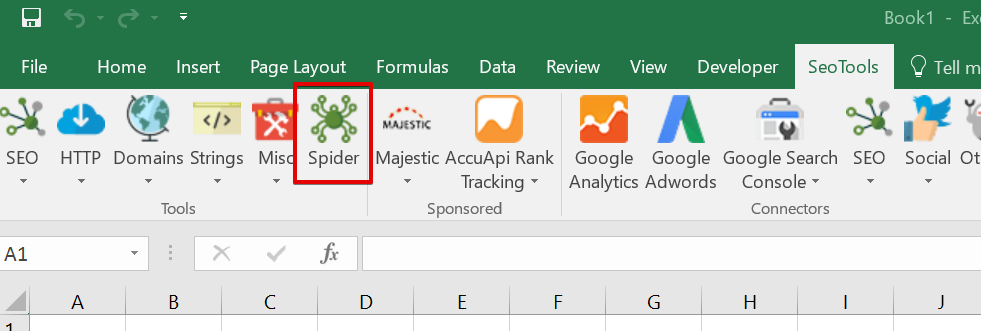

The Spider

One of the main functionalities within SeoTools is the Spider. The Spider is awesome when you want to identify SEO issues on a website such as broken links, duplicate titles, missing meta descriptions and much more.

It is easy to get started with the spider which makes it the perfect functionality for a new SeoTools user to learn first. However, when you dig into the different options it offers, you find that it is a very complex and powerful functionality. Because of this, I have choose to write two separate articles about the Spider functionality. The next article will be more advanced than this one.

How the Spider works

The spider works similar to the way a search engine such as Google does.

First, the spider visit (or “crawl”) the URL of your choice. it identifies all the links on that page and then it goes from link to link and bring data about each web page it visits back into Excel. That data is displayed on an Excel sheet based on the different settings you have set for the Spider prior to the crawl.

Settings

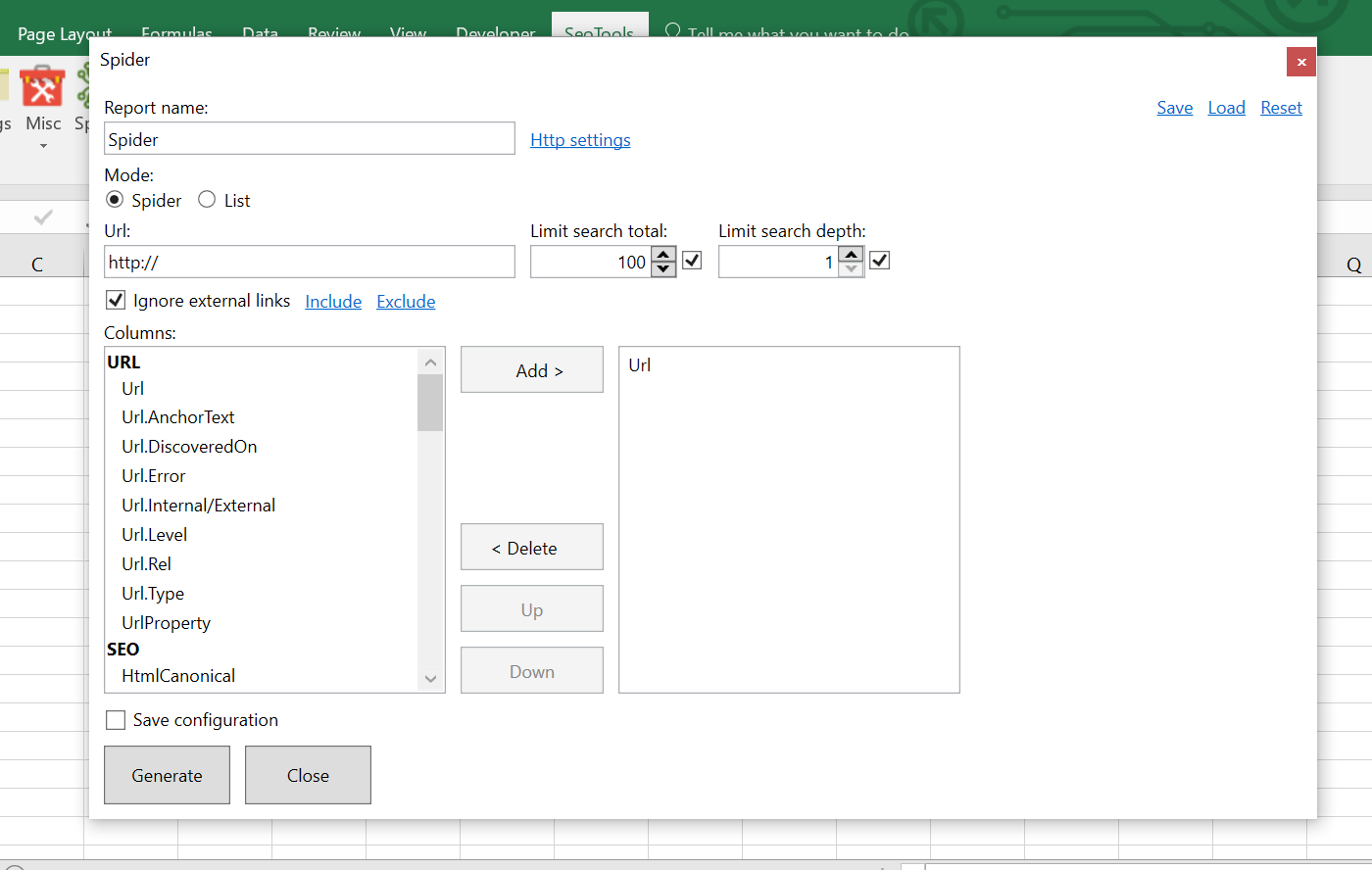

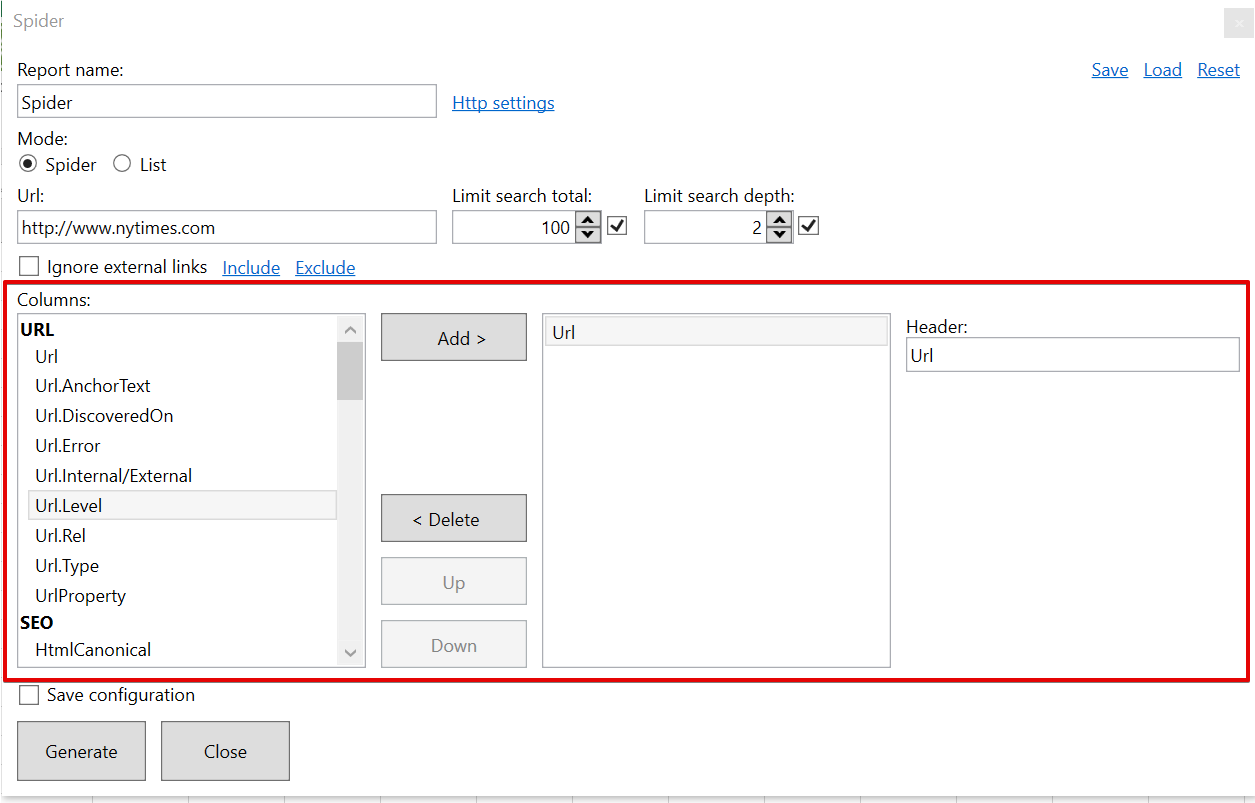

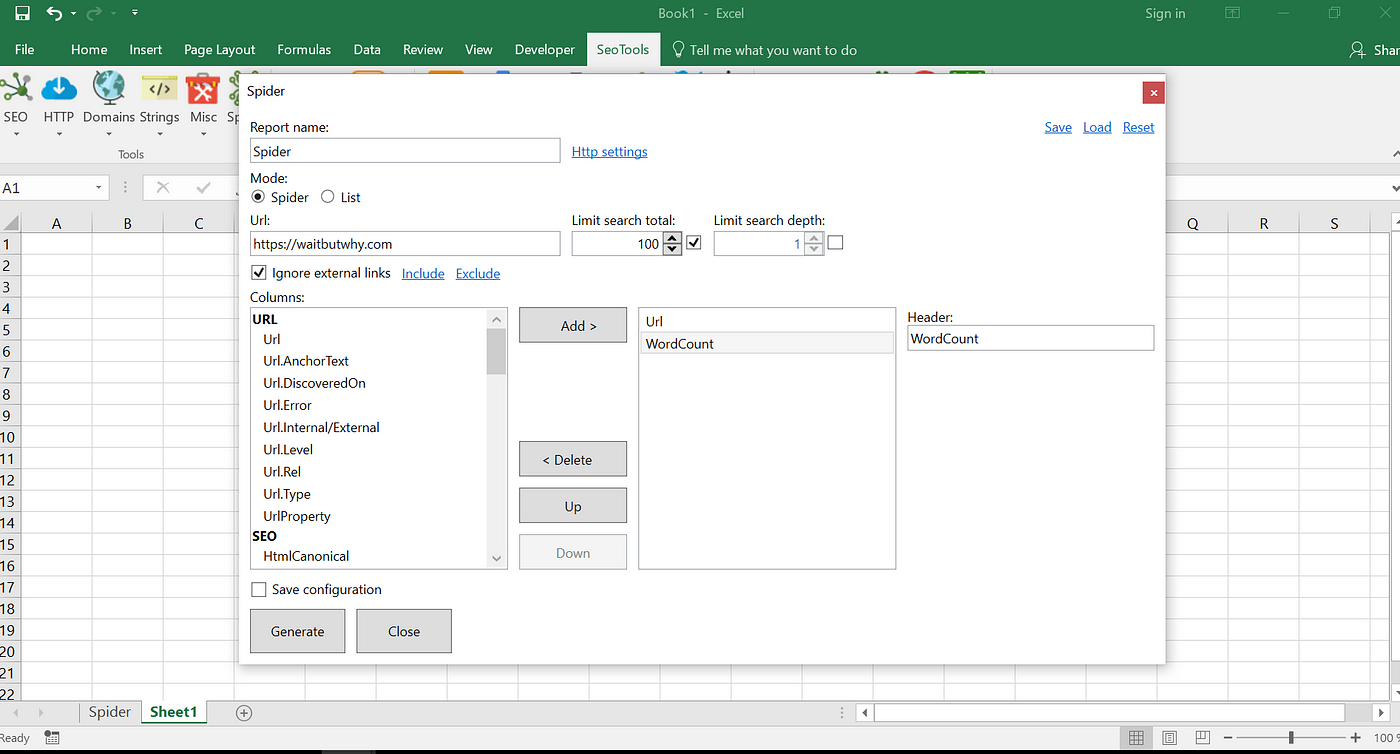

When you open up the Spider, you have a number of different ways to control its behavior. You also have the possibility to choose what data you want it to collect and import to your Excel sheet. Let’s go through each of these settings together.

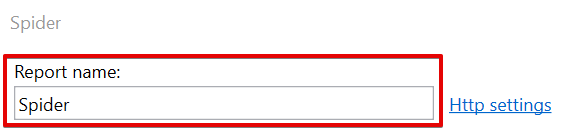

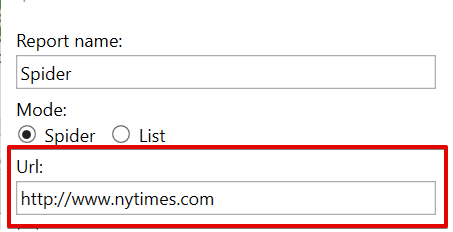

Report name: Here you can give the Excel sheet where the Spider data is imported a name. (we will ignore the “Http settings for now).

Mode: Decide if you want to crawl web pages (Spider) or a local file (“List”). The local list file should be in txt-format and include all the urls you want the spider to collect information about.

Url: The url to the web page we want the spider to start crawling first. In this case, I have given the spider instructions to visit the New York Times start page http://www.nytimes.com and then follow all links from that page.

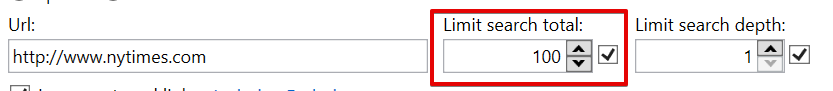

Limit search total: Even if it is possible to crawl a large website with the Spider, it’s still a very memory intensive process. Because of that, Niels recommends you not to crawl over a couple hundred pages per crawl if you want to make sure that Excel doesn’t crash.

However, this depends on how good your computer is and how fast the site that you crawl are. Some trial and error are recommended here to find your perfect settings.

The Limit search total setting makes it possible for you to decide how many pages you want to crawl.

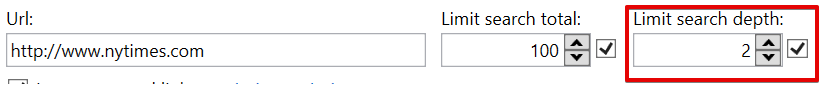

Limit search depth: decide how “deep” you want to crawl in the website structure. In the screenshot below, I have chosen to limit my search depth to 2. This means that I will crawl two layers of sub pages. Using the nytimes.com example, I would crawl http://www.nytimes.com/subpage1/subpage2 but not http://www.nytimes.com/subpage1/subpage2/subpage3

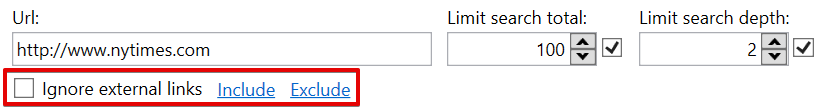

Ignore external links: You use this setting if you don’t want pages from external domains to be included in your crawl. The “Include” link gives you the possibility to use regular expressions to include only certain pages. The “Exclude” link works the same way but gives you the possibility to exclude certain pages.

Choose data to collect in the crawl

This is the section where you choose what data you want the Spider to collect and populate your Excel sheet with.

As you can see on the image above, the different data types are divided into “Columns” and categorized as below

- URL

- HTTP

- SEO

- Connectors

I will only go through the URL and the SEO Columns. The HTTP and Connectors will be addressed in the next article (part 2).

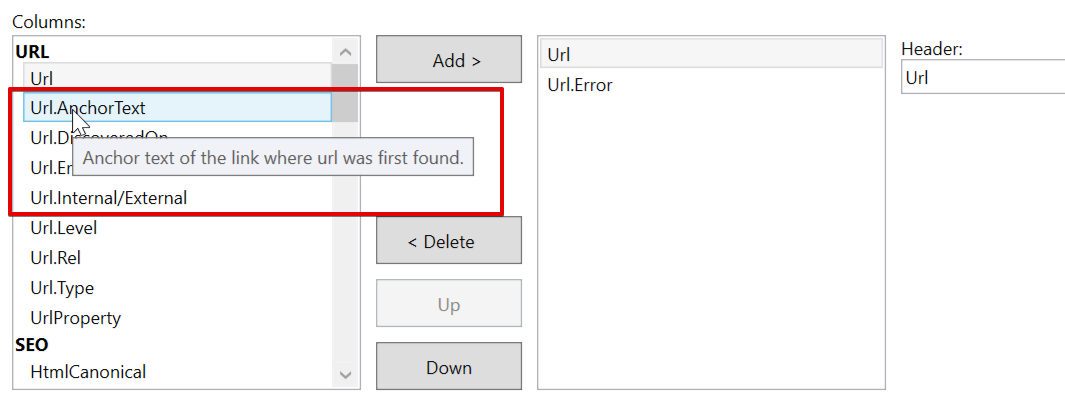

If you hoover over a data type with your mouse, you will see a short description of the data type. It doesn’t work on all of them but most ones.

URL Column

Url: the url of the crawled web page.

Url.AnchorText: The anchor text of the link where the url were first found by the Spider.

Url.DiscoveredOn: The web page where the link to the url was first discovered.

Url.Error: An error catcher, i.e. did the url not respond.

Url.Internal/External: If the web page was found internally or externally.

The page https://www.nytimes.com/video is internal while http://cn.nytimes.com/ is considered external.

Url.Level: How deep (on what level) is the page positioned based on the start page where you started the crawl. If it is on the same level, it is categorized as level 0.

Url.Rel: The REL attribute indicate the relationship of the current resource to the web page it links to. For example, a link to the start page of could have a rel attribute named rel=”home”.

Url.Type: Is the URL classified as Root (the original URL) or as a Link (a link from the original URL).

UrlProperty: The URL property returns the full URL of the current web page.

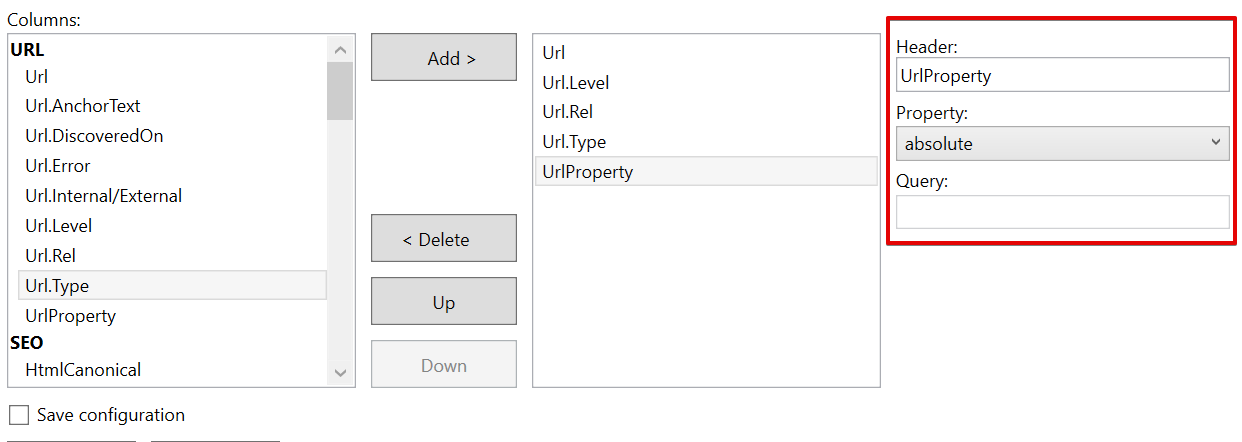

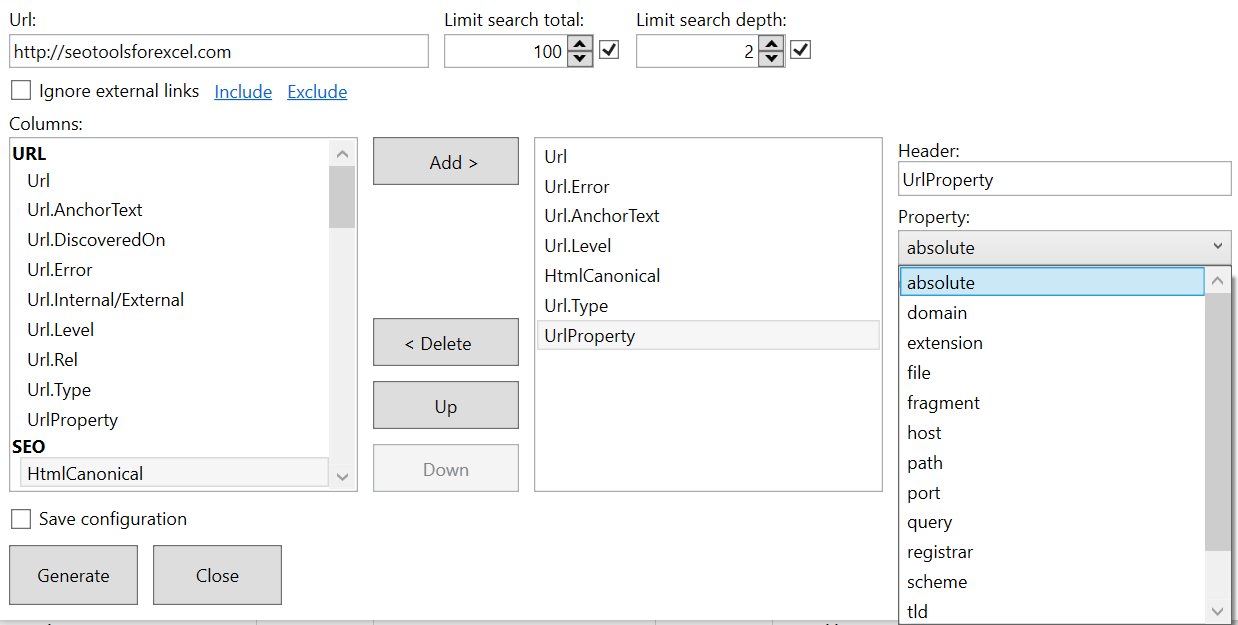

When selected, some data types display an additional setting to the right. This is true for the UrlProperty.

Within this setting, you can find out (filter) a specific property about the URL itself.

If you for example choose domain, you can find out what domain each page belong to.

SEO Column

HtmlCanonical: The rel=canonical element is an HTML element with the purpose to prevent duplicate content issues.

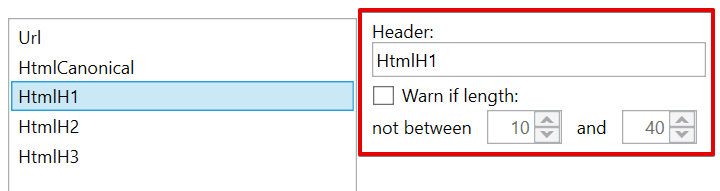

HtmlH1: The H1 is an HTML tag that indicates the most important (or highest level) heading on the page.

To the right, you can find an additional an setting where you can choose to get warned if the length of the H1 tag exceeds a certain number of characters.

An ideal H1 tag from an SEO perspective should be somewhere between 20–70 characters. However, this is not as important now as it was earlier. It’s better to focus on a well-written H1 tag with your main keyword included. Just make sure not to copy you title tag.

HtmlH2: The H2 is an HTML tag that indicates the second most important (or second level) heading on the page. To the right, you have the option to get warned if the length of the H2 tag exceeds a certain number of characters.

HtmlH3: The H3 is an HTML tag that indicates the third most important (or third level) heading on the page. To the right, you have the option to get warned if the length of the H3 tag exceeds a certain number of characters.

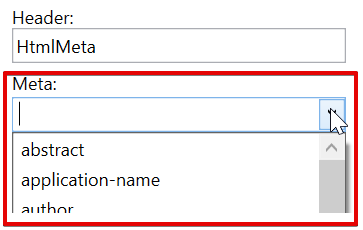

HtmlMeta: The meta tag provides metadata about the page HTML document. Metadata is not displayed on the page, but will be read by for example Google when their bot crawl your page.

Meta elements are typically used to specify page description, keywords, author of the document, last modified, and other metadata.

— https://www.w3schools.com/tags/tag_meta.asp

To the right, you have the option to choose what metadata to bring into Excel.

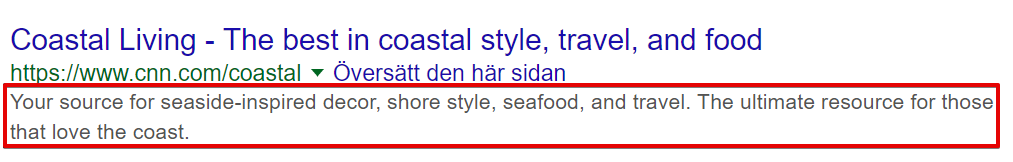

HtmlMetaDescription: The meta description of the web page. Its main purpose is to get the visitor from Google to click on your link in the search results.

The meta description should ideally be between 30 to 160 characters. If it is longer than 160 characters, the search engines generally truncate snippets which is not ideal for the user looking to find more information about the content of the page.

In the additional setting to the right, you have the option to get warned if the length of the meta description exceeds a certain number of characters which you specify.

HtmlMetaKeywords: The meta keywords of the page. Not as important as it used to be from an SEO perspective. In the additional setting to the right, you have the option to get warned if the length of the meta keywords exceeds a certain number of characters which you specify.

HtmlMetaRefresh: Meta refresh is a method of instructing a web browser to automatically refresh the current web page or frame after a given time interval, using an HTML meta element with the http-equiv parameter set to “refresh” and a contentparameter giving the time interval in seconds. — https://en.wikipedia.org/wiki/Meta_refresh

HtmlTitle: The title tag of the page.

A title tag is an HTML element that specifies the title of a web page. Title tags are displayed on search engine results pages (SERPs) as the clickable headline for a given result, and are important for usability, SEO, and social sharing. The title tag of a web page is meant to be an accurate and concise description of a page’s content. — https://moz.com/learn/seo/title-tag

An ideal title tag should be between 50–60 characters but it’s not that exact.

In the additional setting to the right, you have the option to get warned if the length of the title tag exceeds a certain number of characters which you specify. I usually make sure that it is between 20–60 characters.

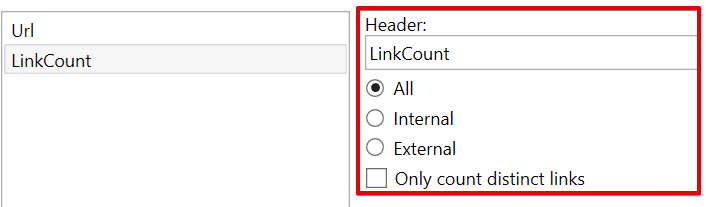

LinkCount: The number of links present on the page.

In the additional setting to the right, you have the option to choose what type of links to count, i.e. internal or external.

You also have the possibility to only count distinct links, i.e. make sure that links to a page only counts once. An example, if nytimes.com link to weather.com 5 times, you can make sure that it is only counted as one unique link.

PageCodeSize: Page code size in bytes.

PageCodeToTextRatio: Page code to text ratio.

PageEncoding: The encoding of the page, for example utf-8.

PageSize: Page size in bytes.

PageTextSize: Page text size in bytes.

WordCount: Number of words present on the page.

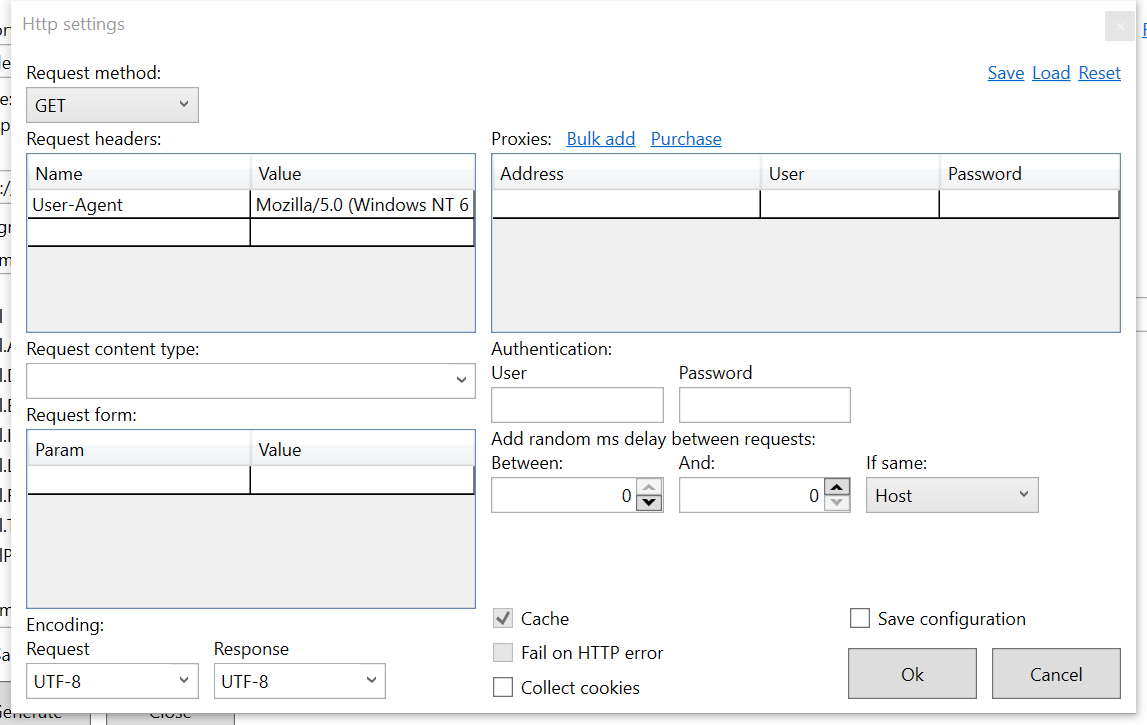

Http settings

Next to the Report name, can find a link named Http settings.

Within the http setting, you can make more advanced changes to how you want the Spider to function.

Day-to-day usage

We have looked at quite a few different functions within the Spider functionality of SeoTools. Now it’s time to give some examples on how you can use these functions in your daily job as a growth hacker/online marketer.

Google Search Engine Results Page (SERP)

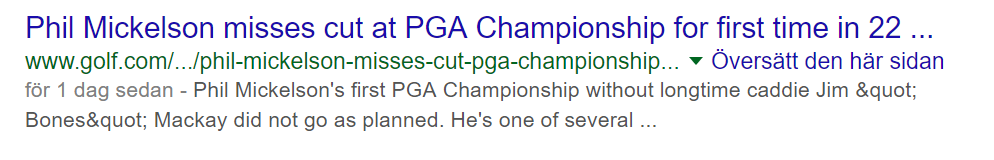

Let’s pretend that we want to make sure that the website golf.com is displayed properly for the user in the SERP and for Google when crawling the site.

We want to start by assuring that the H1 tag, the title tag and the meta description tag is present on all pages and that they are not to long.

For this example, we will only crawl the first 100 pages of golf.com and limit the search depth to one.

Let’s set up the Spider according to best practice and start the crawl.

The scrape is now done. Let’s take a look at the result.

We can see that a lot of pages are missing a H1-tags. Most authorities within SEO consider the H1-tag to be an important ranking factor so it’s not ideal that those are missing on these pages. It might not matter to much on an authority site such as Golf.com but for a smaller site, it could make a huge difference in terms of Google ranking.

We can also see that some pages have meta descriptions which are too long. If we look at those pages in Google, we can see that they have been truncated and hence, the meta description might not offer me a good explanation on what the page (in this case a blog post) is about.

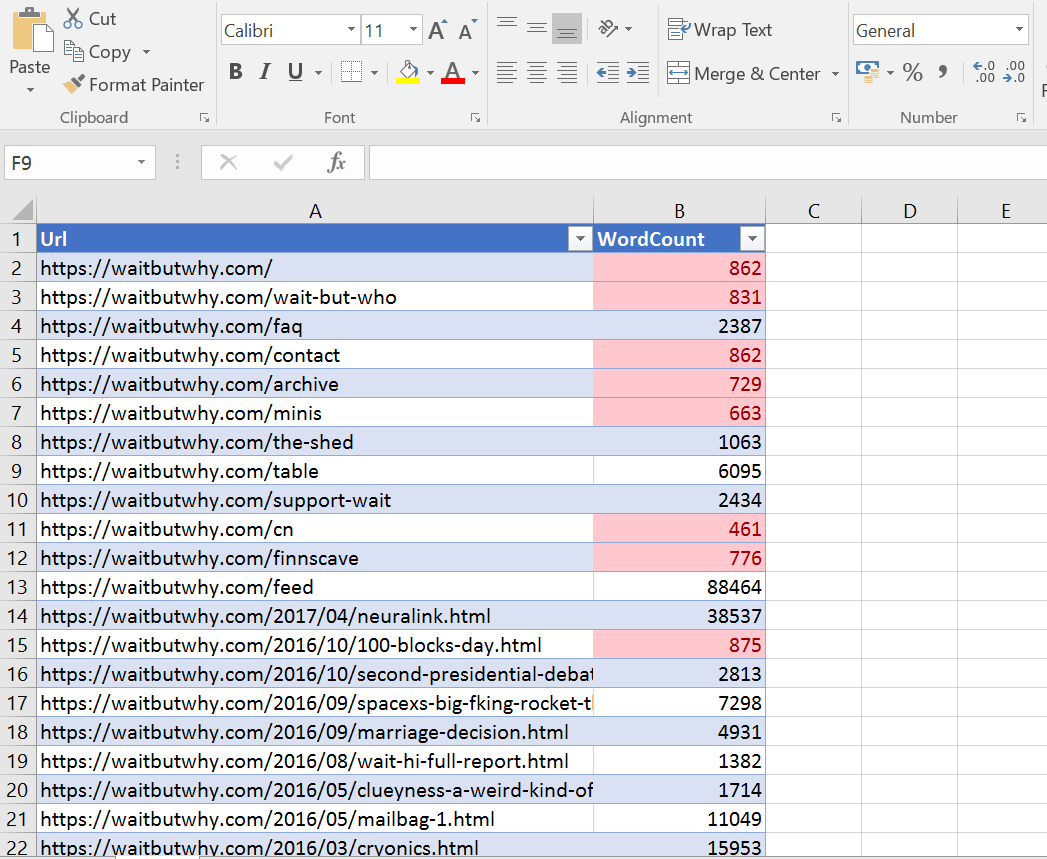

Find pages with thin content

Perhaps you have heard the saying that content is king? This is not only true from a user perspective. Google has said that “content” is among its top three ranking factors.

Test after test prove this true by showing that long content (1,000+ words) tends to rank better in organic results. SEOTools can help you identify thin content, i.e. pages where you need to add more content.

Let’s take a look at Wait But Why, one of my favorite blogs. Wait But Why is covering a lot of different topics, including artificial intelligence, outer space, and procrastination. Let’s use the spider together with the WordCount functionality and see if we can find any pages with few words.

Using some conditional formatting, it’s easy to identify pages with less than 1000 words. Wait But Whys main format are long-form blog posts so most pages have a lot of content in them but on many sites, tons of important pages have been neglected in terms of amount of content.

Final Remarks

I hope that this blog post has given you an idea of how powerful the spider functionality within SEOTools are and how you can start using it most effectively.

In the next blog post, I will go through the more advanced features of the Spider found in the HTTP and Connectors columns.

You will among other things learn how to scrape information from any website, make sure that your Google Analytics code is present on all pages and find out the number of Twitter and LinkedIn shares your pages have received.

free seo tools

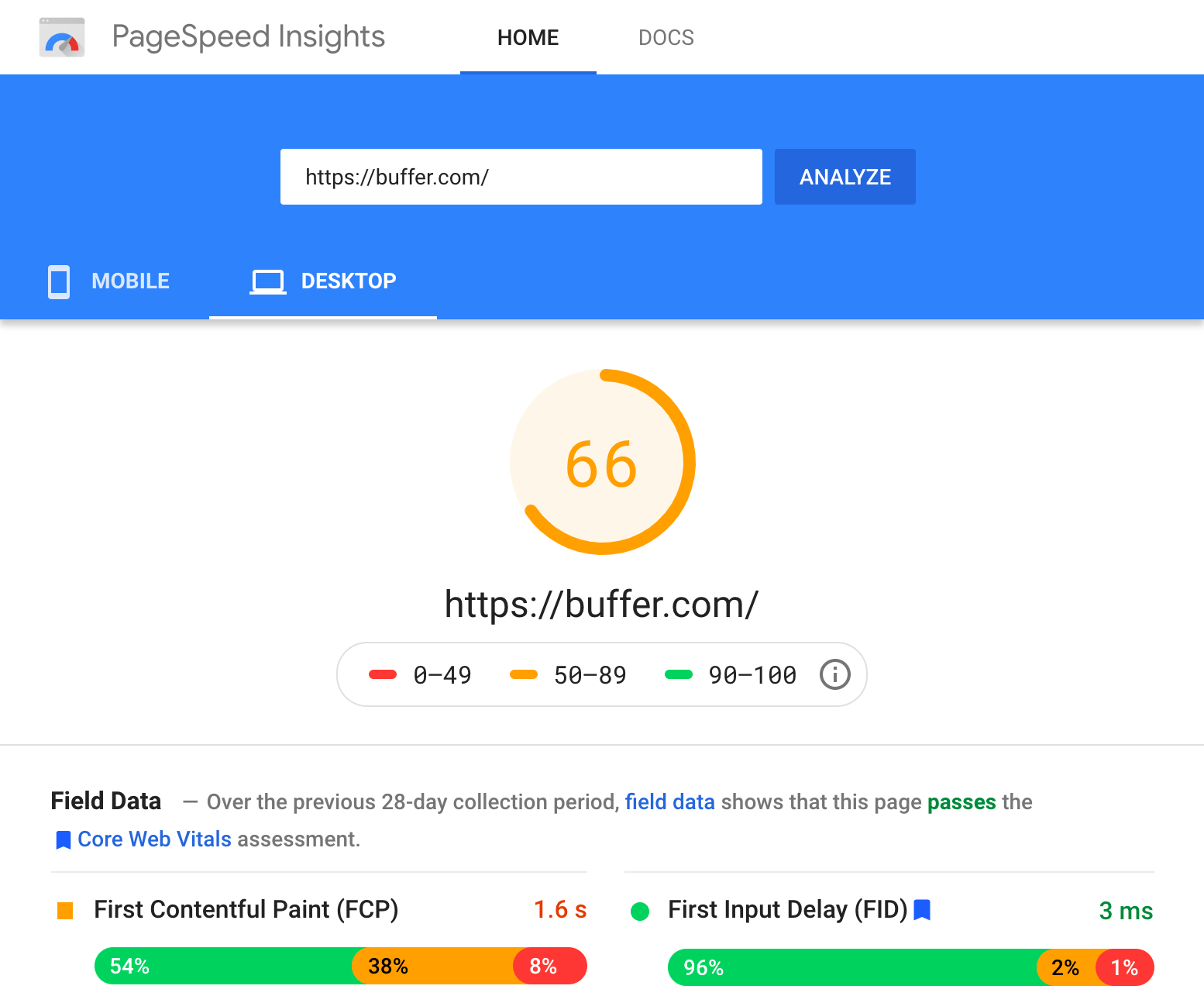

1. Google PageSpeed Insights

Check the speed and usability of your site on multiple devices

Limitations: None

Enter a URL and this tool will test the loading time and performance for that URL on desktop and mobile. It then grades your site’s performance on a score from 0 – 100. It tells you exactly how fast it takes to load the site according to different metrics, and also suggests areas for improvements.

Alternatives:Pingdom, WebPageTest, and GTMetrix

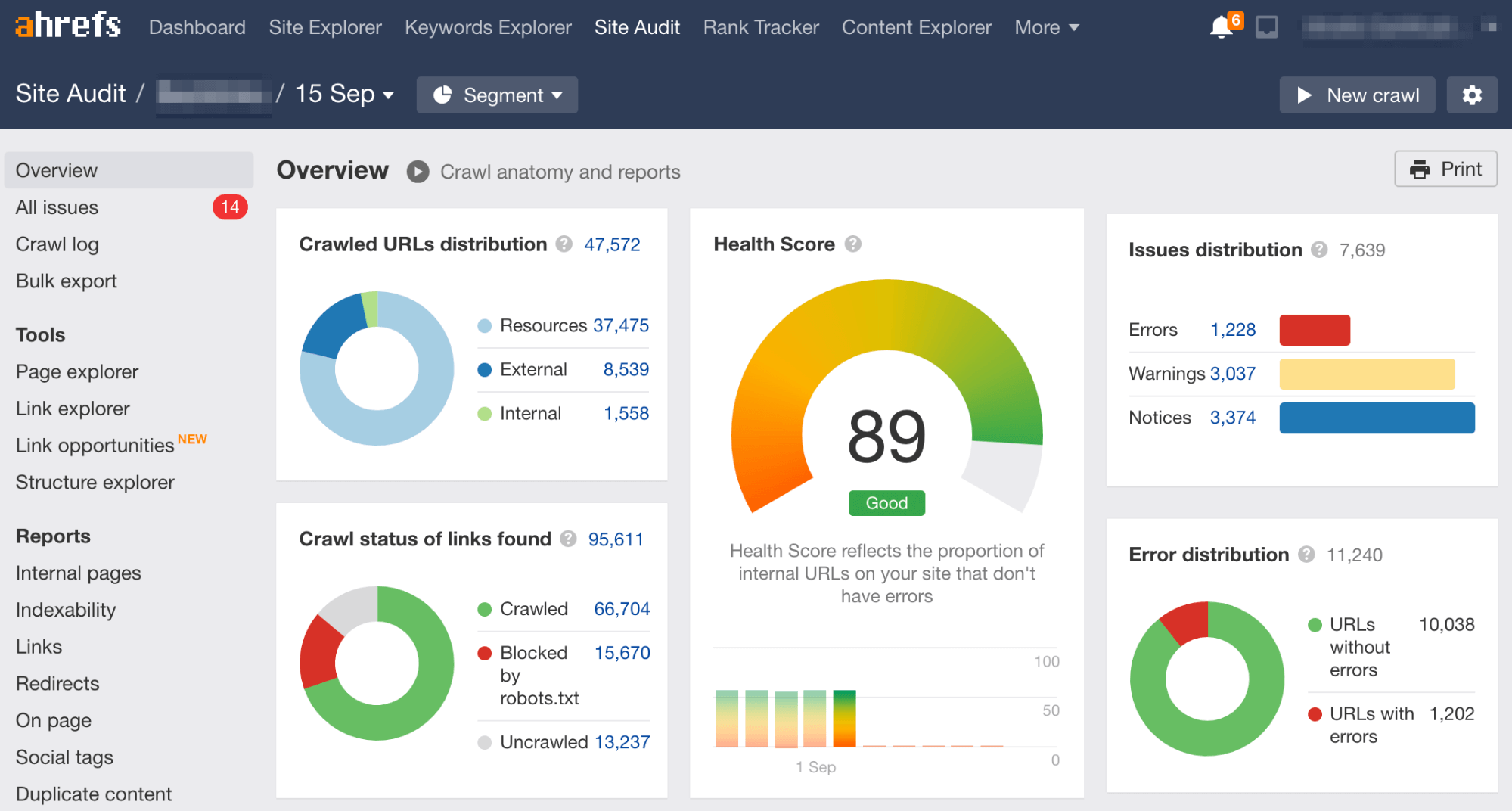

2. Ahrefs Webmaster Tools

Run a technical audit of your site

Limitations: 5,000 crawl credits per project per month

Sign up for Ahrefs Webmaster Tools, verify your website and you’ll be able to audit your website for over 100+ technical SEO issues. The tool also gives suggestions on how to fix them.

After running an audit, it also suggests areas where you can improve your internal linking, which is helpful in boosting your rankings in search engines.

This tool also allows you to see your site’s organic keyword rankings as well as who’s linking to you.

Alternatives:Screaming Frog (audit), Beam Us Up (audit)

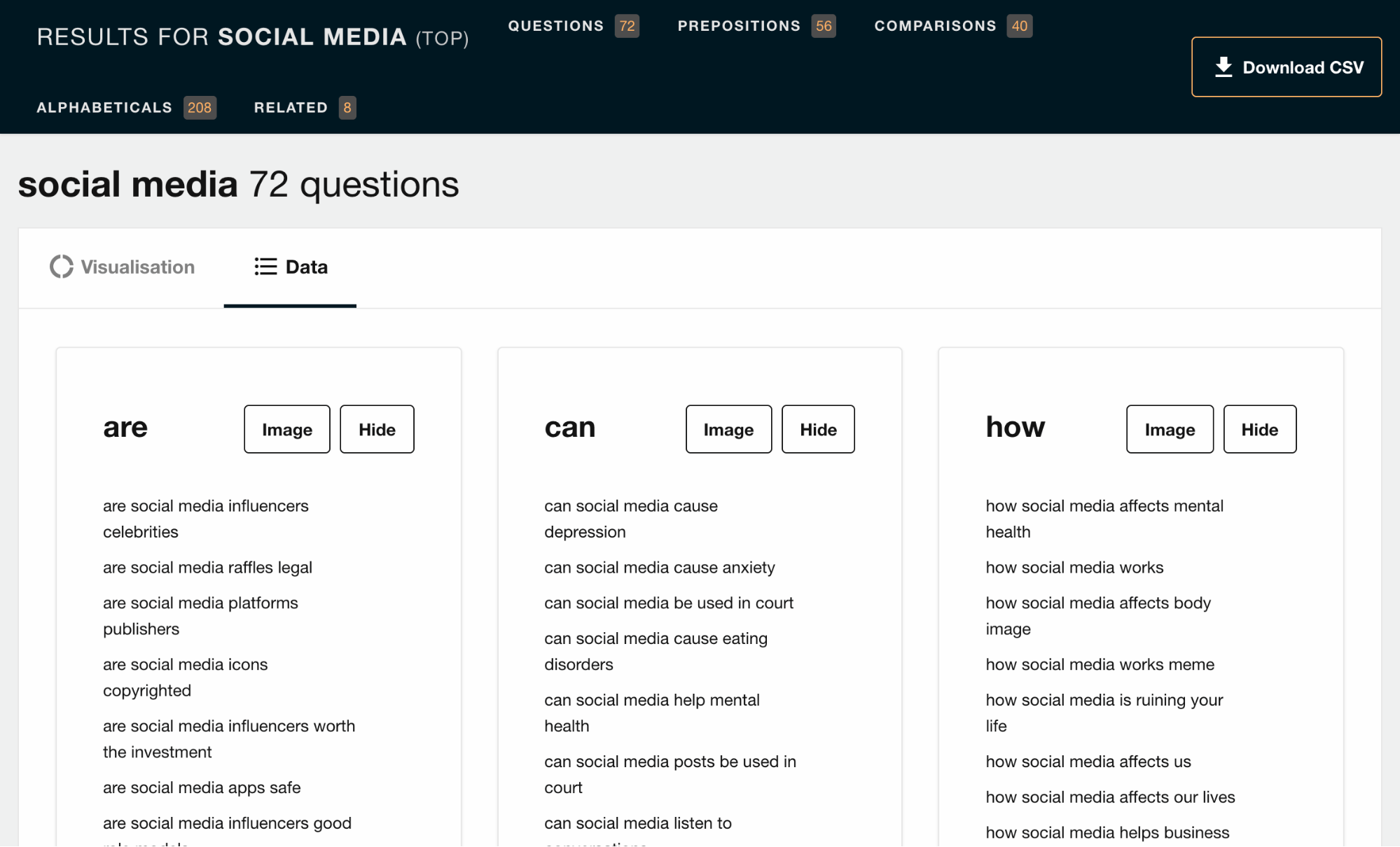

3. Answer the Public

Hundreds of keyword ideas based on a single keyword

Limitations: Two free searches per day

Enter any relevant keyword, and Answer the Public will provide a huge list of long-tail keyword opportunities, plus common questions asked.

Alternatives: KeywordTool.io, UberSuggest, Keyword Sheeter, Keyword Generator

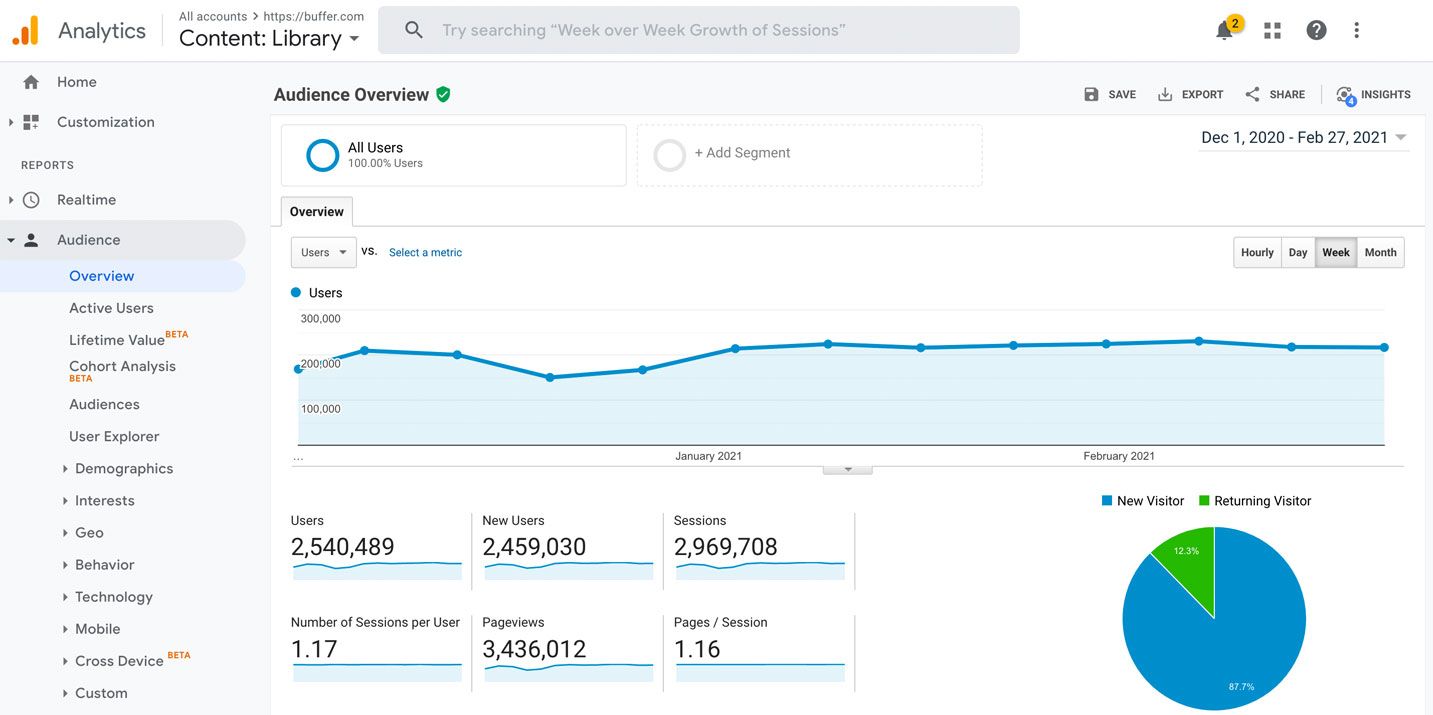

4. Google Analytics

Complete web stats and search insights

Limitations: No limitations for its usage, but queries that are sending you organic traffic are hidden

Quite possibly the most powerful free analytics tool available, Google Analytics tracks pretty much every bit of traffic you can imagine on your website—where it comes from, which page is receiving it and so on.

While it’s not purely for SEO, it’s still a helpful tool to track if you’re getting traffic from organic search.

However, Google Analytics has since stopped showing which keywords are sending you those traffic. You’ll have to pair it with a tool like Keyword Hero to uncover what’s behind “(not provided).”

Alternatives:Matomo, Open Web Analytics, and Clicky

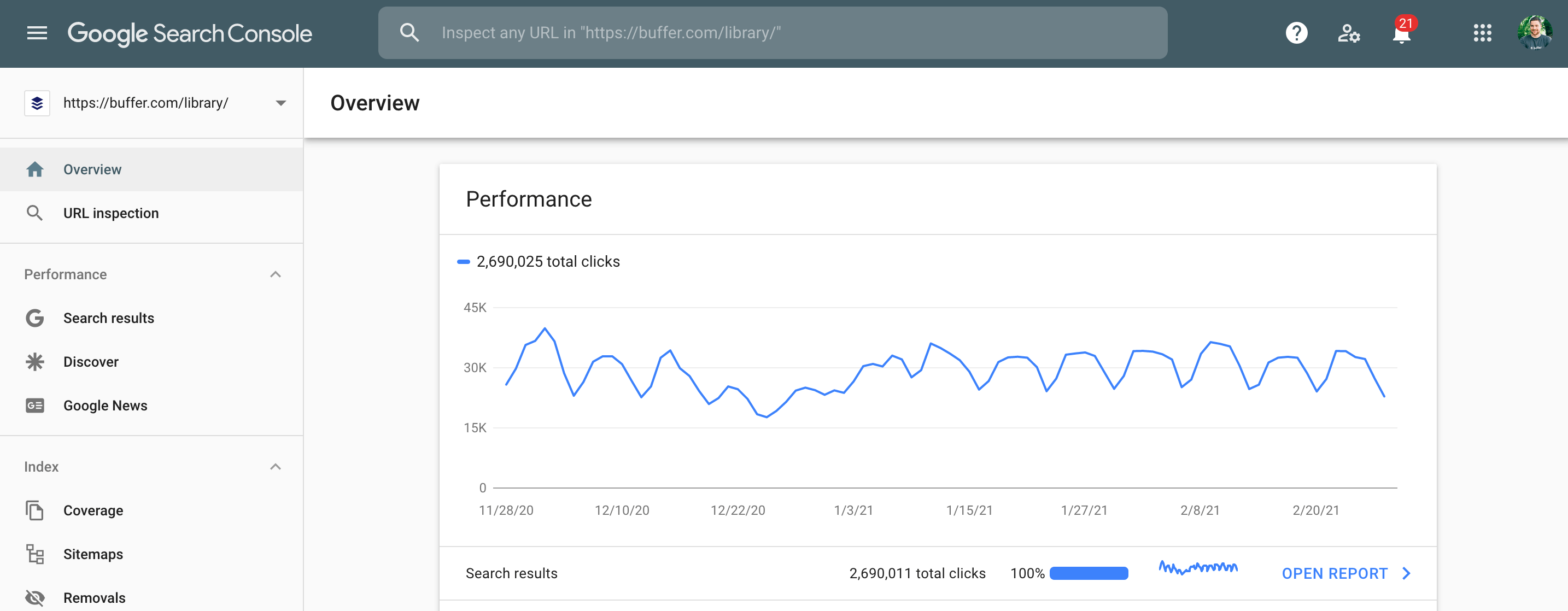

5. Google Search Console

Constant website analysis, alerts, and error reports

Limitations: Only shows a handful of technical SEO issues, the top 1,000 backlinks and top 1,000 organic keywords

Google Search Console gives you a taste of what the most used search engine thinks of your website. You can use it to check and fix technical issues on your website, see important SEO data like clicks, impressions and average ranking position, submit sitemaps and more.

If ranking in search engines like Bing and Yandex are important to you, then take note that they have their own “search console” too.

Alternatives:Bing Webmaster Tools, Yandex Webmaster Tools

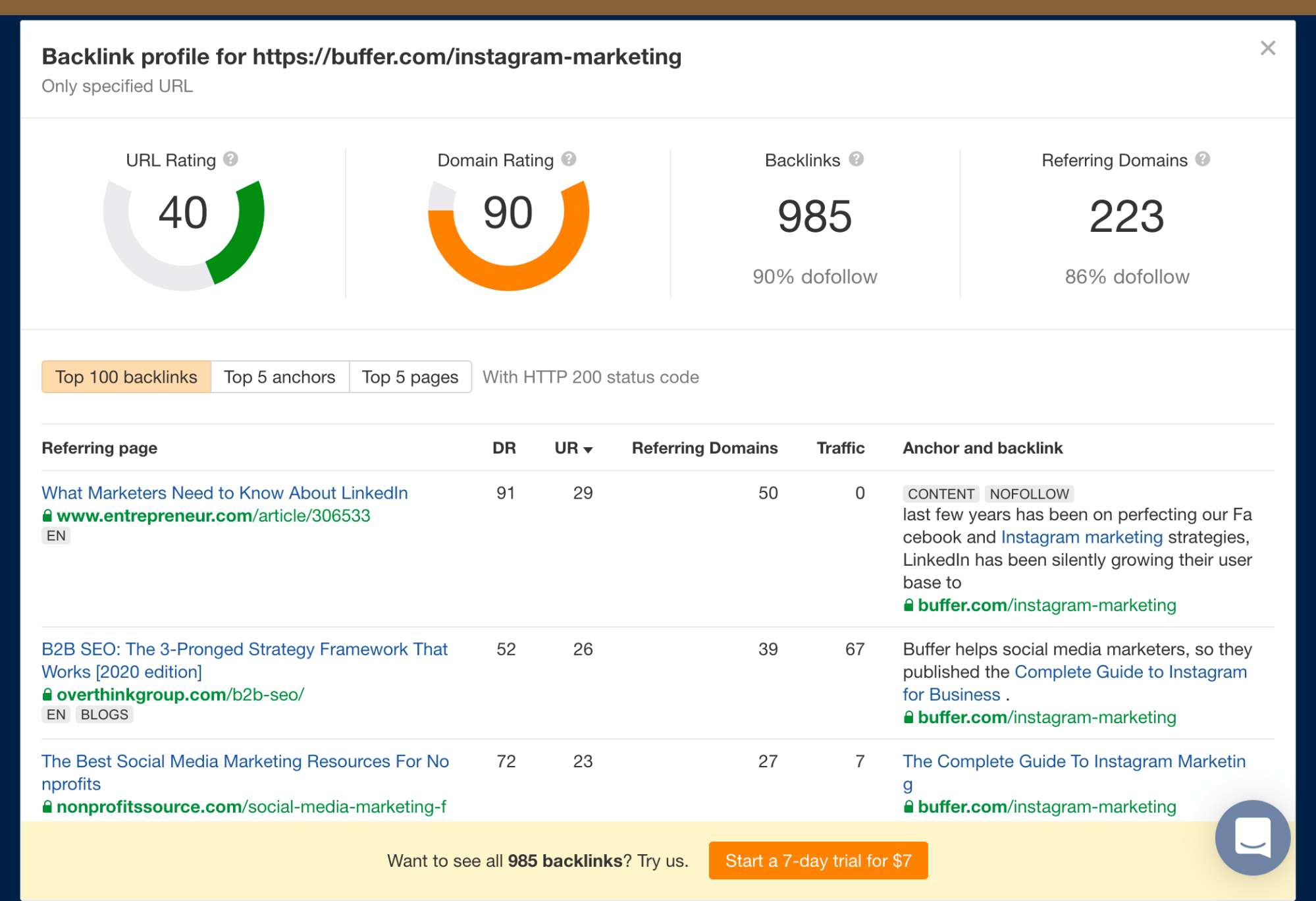

6. Ahrefs’ Backlink Checker

Comprehensive link analysis

Limitations: Free for the top 100 backlinks

The free version of Ahrefs’ Backlink Checker shows the top 100 backlinks to any website or URL, along with the total number of backlinks and referring domains (links from unique sites), Domain Rating (DR), and URL Rating (UR) where applicable.

A great way to use this tool is to paste your competitor’s website and find potential link building opportunities.

Alternatives: Moz Link Explorer

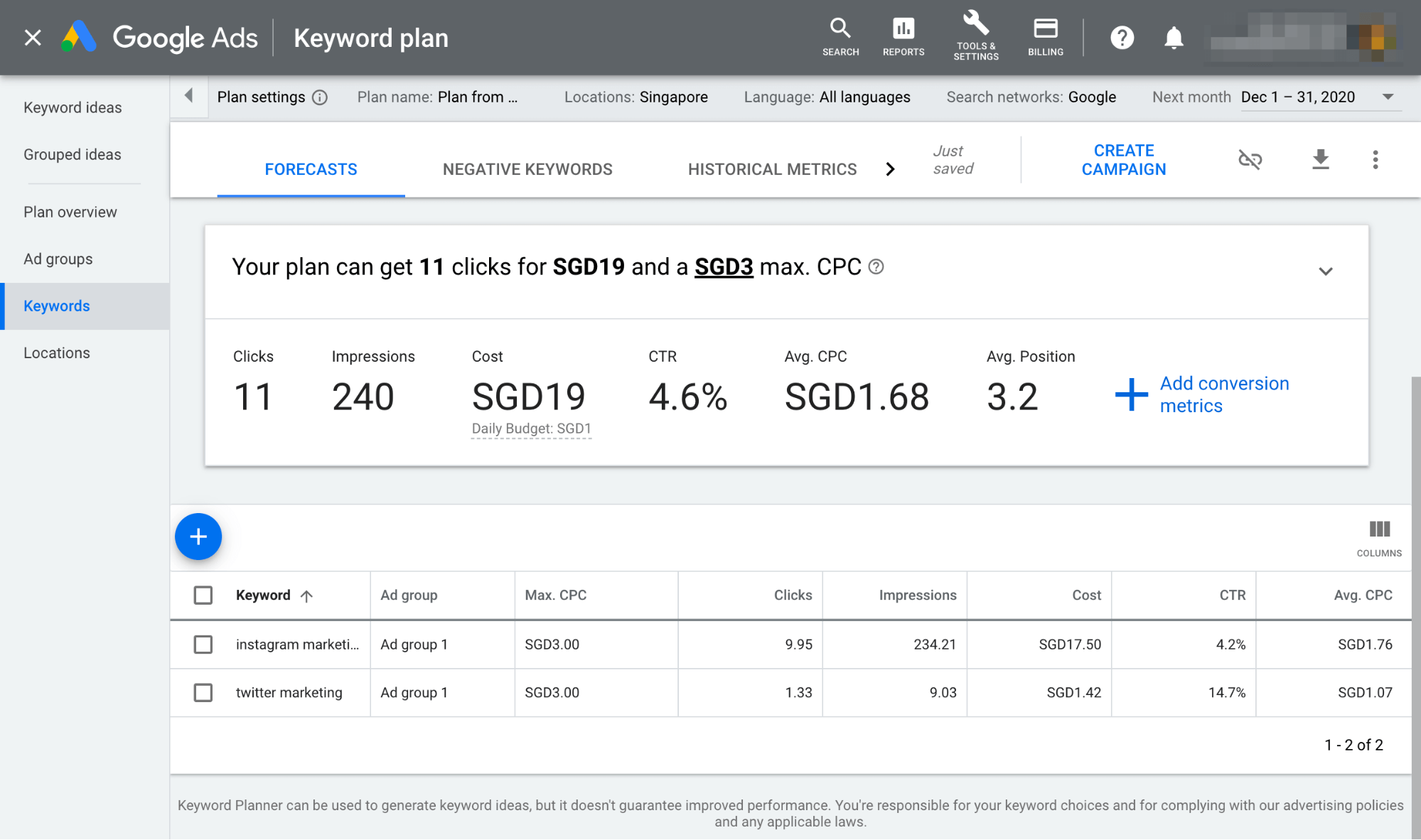

7. Google Ads Keyword Planner

Know what people search for

Limitations: You’ll need to run an ad campaign to see exact search volumes

Enter a keyword or group of keywords into the tool, and Google Keyword Planner will return all sorts of helpful stats to guide your keyword strategy: monthly search volume, competition, and even suggested terms you might not have considered.

Alternatives:Bing Keyword Planner

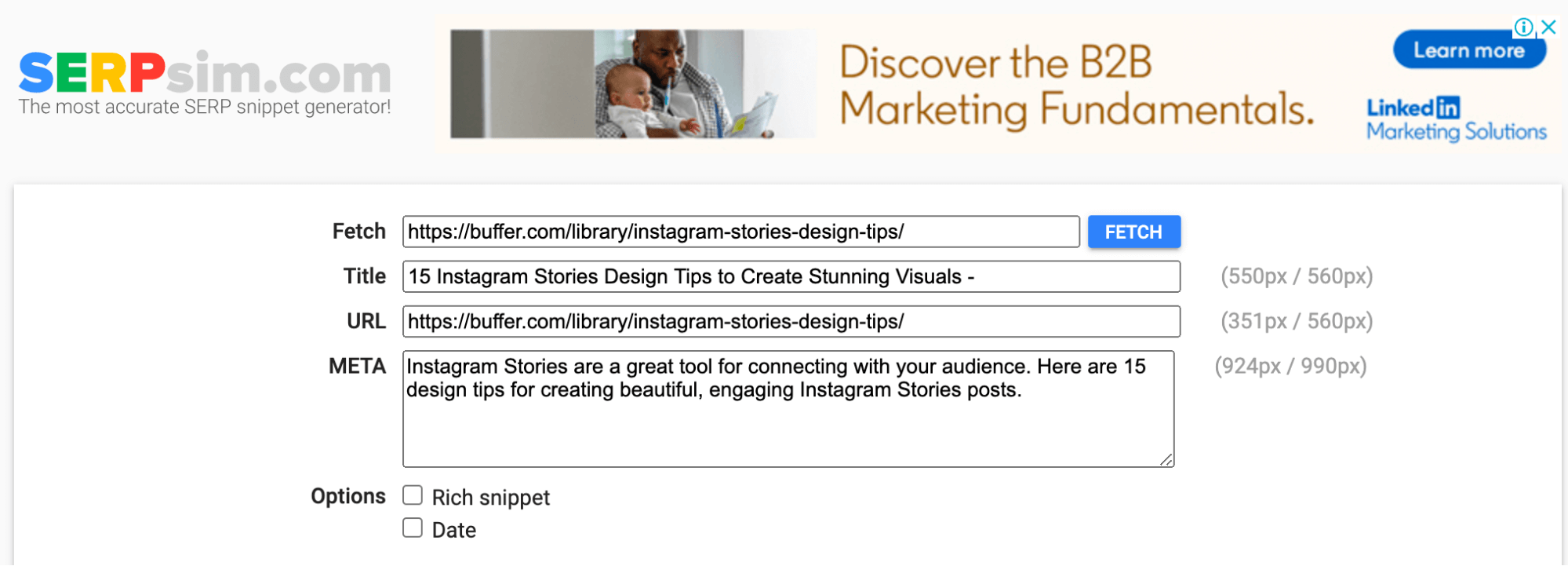

8. SERPSim

Preview how your web pages will look in Google’s search results

Limitations: None

See how your meta title and description will appear in the search results before you even publish your web page. Works for desktop and mobile.

Check for truncation issues and fix them instantly.

Alternatives: Portent’s SERP Preview Tool

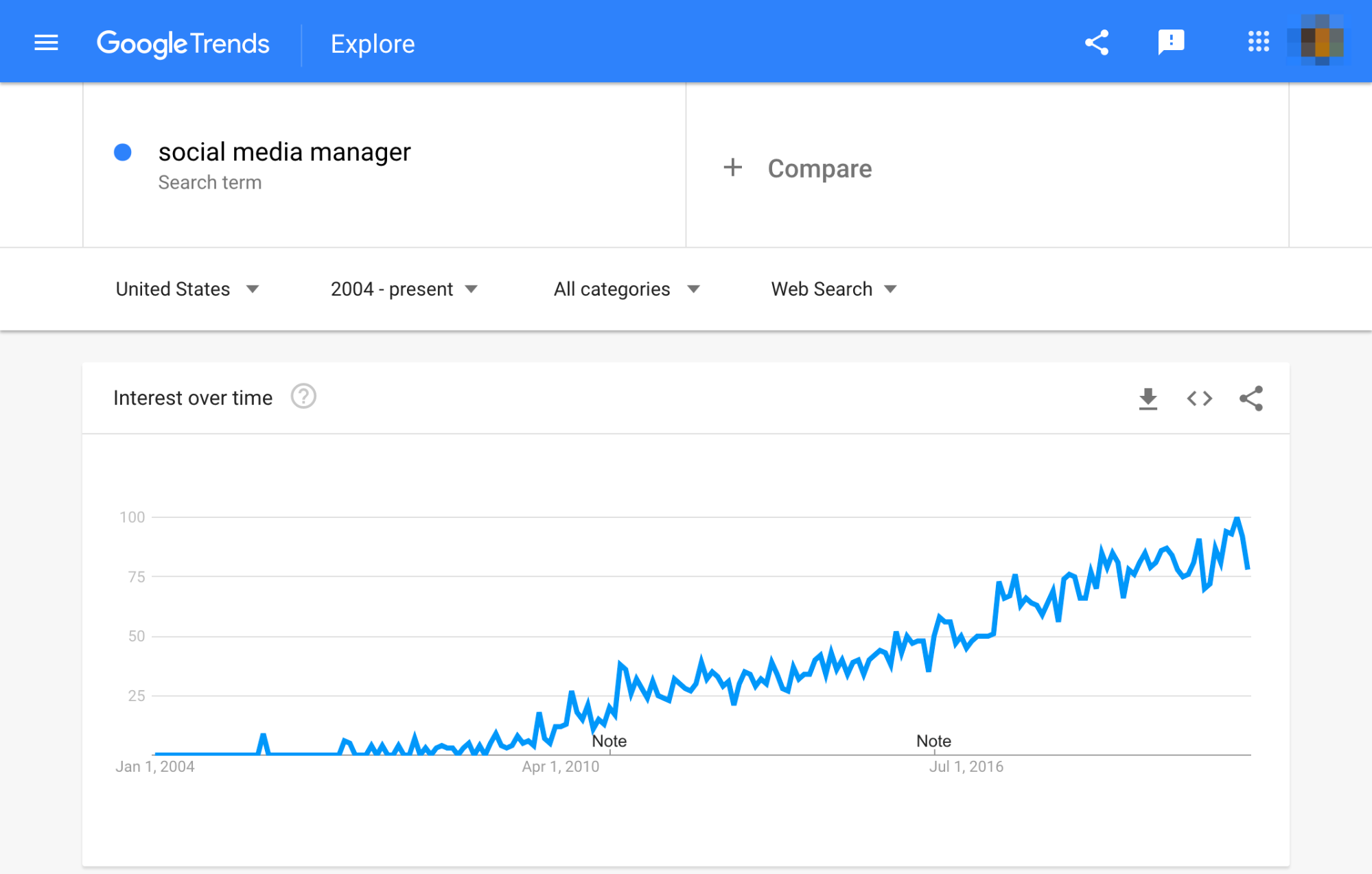

9. Google Trends

See the relative search popularity of topics

Limitations: None

Google Trends shows the popular search terms over time, which is useful for uncovering seasonal variations in search popularity amongst other things. Compare multiple terms to see the relative popularity.

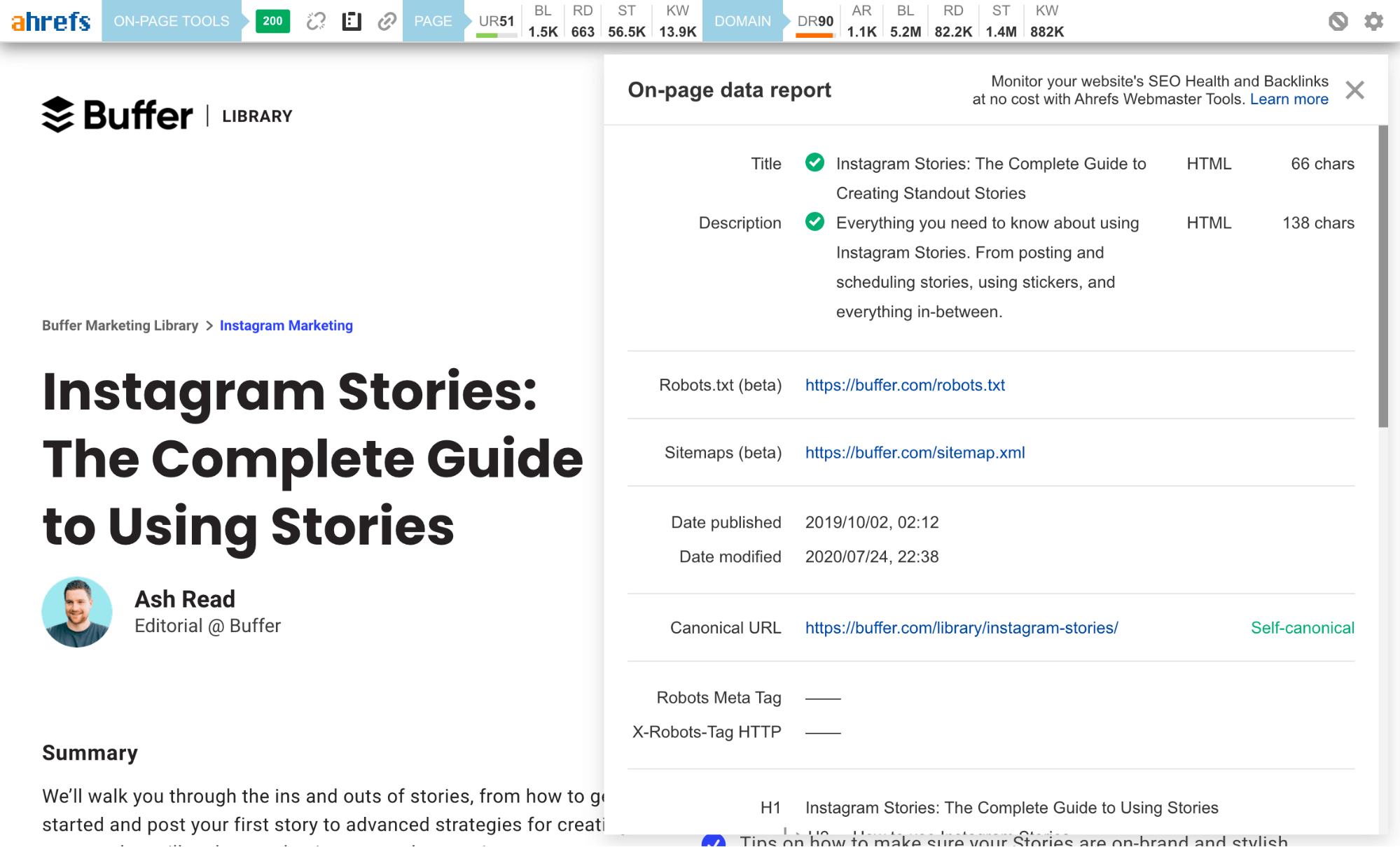

10. Ahrefs’ SEO toolbar

Check the broken links, redirect chains, nofollow links and on-page elements for any webpage

Limitations: Technical and on-page SEO features are free, but you’ll need an Ahrefs account to see SEO metrics within the SERPs

The Ahrefs SEO toolbar is a free Chrome and Firefox extension that allows you to check for broken links, trace redirect chains and highlight nofollow links for any webpage. It also generates an on-page SEO report that includes the webpage’s:

- Title

- Meta description

- Word count

- Headers

- Hreflang tags

- Canonicals

- OG tags

This makes analyzing any page much easier and faster.

If you have access to a paid Ahrefs account, you’ll also be able to see important keyword metrics like search volume, CPC and keyword difficulty within the SERPs.

Alternatives:Detailed SEO Extension, SEO Minion, LinkMiner (broken links), Ayima Redirect Path (redirect tracing)

Conclusion

Let us know your thoughts in the comment section below.

Check out other publications to gain access to more digital resources if you are just starting out with Flux Resource.

Also contact us today to optimize your business(s)/Brand(s) for Search Engines